When it comes to programming, there’s a translation process that has to occur between the code we write and the code that the computer can understand. For Python, the translation process is a bit complicated, but we can simplify it by focusing on one concept: the interpreter.

In this article, we’ll talk about how computers make sense of code. In particular, we’ll cover three tools that can convert code into binary: assemblers, compilers, and interpreters. Then, we’ll get a chance to actually play with the Python interpreter before closing things out with some plans to learn more.

Table of Contents

- Computers Don’t Understand Code

- Translating All of the Jargon

- The Python Interpreter in Action

- Opening Pandora’s Box

Computers Don’t Understand Code

Up to this point in the series, we’ve talked about a lot of interesting concepts. For example, we talked about how programmers used to have to use plugboards and toggle switches to code. Now, we defer to high-level programming languages like Python.

Ironically, however, computers don’t actually understand code—at least not directly. See, the same fundamentals we discussed before still apply: all computers understand is binary (i.e. ON and OFF). In other words, programming allows us to abstract this reality.

By writing code, we’re really just passing work off to some other tool. In Python, the tool that handles the translation is known as the interpreter. For languages like Java and C, the translation process happens in a compiler. For lower-level languages, the process skips right to the assembler.

Naturally, all of this jargon boils down to one simple idea: translation. In the same way that you need a translator when you travel to a country that uses a different language, a computer relies on a translator to be able to understand a programming language. In the next section, we’ll talk broadly about the different ways translation occurs in a computer.

Translating All of the Jargon

Previously, I mentioned a few different translation tools: the interpreter, the compiler, and the assembler. In this section, we’ll look at each of these tools to understand exactly what they do.

The Assembler

To kick things off, we’ll start with the tool that is closest to the processor: the assembler. When it comes to writing programs for computers, we can start at a lot of different levels. If we knew what we were doing, we could write code in binary directly (e.g. 00010110).

This works because computers fundamentally operate on binary. After all, a sequence of zeroes and ones is really just a set of instructions for turning wires ON and OFF.

Of course, it can be really, really tedious to write in binary. On top of that, each processor is different, so the same 8 bits can mean different things on different processors.
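To make that concrete, here is a minimal Python sketch that reads the same 8 bits from earlier in three different ways. The split into a 4-bit operation code and a 4-bit operand is an assumption made up for illustration; it doesn’t describe any real processor.

```python
# The same 8 bits from the text, written as a Python binary literal.
bits = 0b00010110

# Read as an unsigned integer, the pattern is just the number 22.
as_number = bits

# Read as a character code, the same value is a control character.
as_character = chr(bits)

# A made-up processor (hypothetical, not real hardware) might instead split
# the byte into a 4-bit operation code and a 4-bit operand.
opcode = bits >> 4         # upper four bits: 0001
operand = bits & 0b1111    # lower four bits: 0110

print(as_number)           # 22
print(repr(as_character))  # '\x16'
print(opcode, operand)     # 1 6
```

Nothing about the bits themselves says which reading is the right one. That decision belongs to whatever is consuming them.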

Fortunately, someone came along and wrote a binary program to assemble binary programs. This became known as an assembler, and it allowed us to use a more human-friendly syntax. That said, assembly code is hardly user-friendly by today’s standards. Take a look:

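    section .text
        global _start

    _start:
        mov edx,len     ; number of bytes to write
        mov ecx,msg     ; address of the message
        mov ebx,1       ; file descriptor 1 (stdout)
        mov eax,4       ; system call number for sys_write
        int 0x80        ; ask the Linux kernel to run the call

        mov eax,1       ; system call number for sys_exit
        int 0x80        ; ask the Linux kernel to run the call

    section .data
        msg db 'Hello, world!',0xa   ; the text followed by a newline
        len equ $ - msg              ; length of the text in bytes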

Interestingly, in the world of programming, the act of using a language to build a more abstract language is called bootstrapping, and it’s the foundation of modern programming. In order to build something better, we have to use what we already have.

In this case, we created a programming language that essentially mapped simple commands directly to their binary equivalents. As a result, assembly code is specific to its hardware architecture (i.e. each new architecture comes with its own assembly language).
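The mapping idea can be sketched in a few lines of Python. Everything here is hypothetical: the mnemonics, the 4-bit opcodes, and the `assemble` function are invented for illustration and don’t correspond to a real instruction set.

```python
# Hypothetical instruction set: each mnemonic maps to a made-up 4-bit opcode.
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011, "HALT": 0b1111}


def assemble(source: str) -> list[str]:
    """Translate each 'MNEMONIC operand' line into one 8-bit binary string."""
    machine_code = []
    for line in source.strip().splitlines():
        parts = line.split()
        mnemonic = parts[0]
        # Instructions without an operand (like HALT) get an operand of 0.
        operand = int(parts[1]) if len(parts) > 1 else 0
        if not 0 <= operand <= 15:
            raise ValueError(f"operand {operand} does not fit in 4 bits")
        # Pack the opcode into the upper 4 bits and the operand into the lower 4.
        byte = (OPCODES[mnemonic] << 4) | operand
        machine_code.append(format(byte, "08b"))
    return machine_code


program = """
LOAD 6
ADD 3
STORE 9
HALT
"""

print(assemble(program))
# ['00010110', '00100011', '00111001', '11110000']
```

A different (equally made-up) processor would need a different `OPCODES` table, which is roughly why assembly code doesn’t carry over from one architecture to another.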

In the next section, we’ll look at a tool that allowed us to step away from assembly code altogether.

The Compiler

While assembly code was an amazing innovation in terms of programming, it still wasn’t great. After all, assembly code was never very abstract; a new assembler had to be written for every new architecture.

Fundamentally, this design was a problem because code was never portable. In other words, entire software systems had to be rewritten as new hardware architectures were built.

Naturally, the solution to this problem was to create another layer of abstraction. In other words, what if we created a language that wasn’t hardware-specific? That way, we could design a tool that could translate our new language to various architectures. That tool became known as a compiler.

Fun fact: the first compilers were written in assembly code. Apparently, one of the first programming languages written in itself was Lisp in 1962.

The beauty of the compiler is that it allowed us to totally ignore underlying architecture. As an added bonus, we were able to craft entirely new languages that didn’t have to change when computing architectures changed. Thus, high-level programming languages were born (e.g. Java, C, FORTRAN, etc.).

In practice, compilers—or at least pieces of them—tend to be written from scratch for every architecture. While that might seem like it’s not alleviating the core issue, it actually pays off quite a bit. After all, when a new architecture comes along, we only have to write the compiler once. Then, any programs depending on that compiler can target the new architecture. No one has to rewrite their software anymore (for the most part…).
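Here is a toy sketch of that payoff in Python. The two targets (`arch_a` and `arch_b`), their instruction spellings, and the `compile_sum` function are all assumptions invented for this example; real compilers are far more involved.

```python
# Two hypothetical targets: how each one spells "load a constant" and "add".
TARGETS = {
    "arch_a": {"load": "LOAD {reg}, {value}", "add": "ADD {dst}, {src}"},
    "arch_b": {"load": "mov {value} -> {reg}", "add": "sum {src} into {dst}"},
}


def compile_sum(source: str, target: str) -> list[str]:
    """Compile a 'number + number + ...' expression for the given target."""
    templates = TARGETS[target]
    values = [int(term) for term in source.split("+")]
    # Load the first number into register r0, which holds the running total.
    instructions = [templates["load"].format(reg="r0", value=values[0])]
    for value in values[1:]:
        # Load each remaining number into r1, then add it to the total.
        instructions.append(templates["load"].format(reg="r1", value=value))
        instructions.append(templates["add"].format(dst="r0", src="r1"))
    return instructions


program = "2 + 3"
print(compile_sum(program, "arch_a"))  # ['LOAD r0, 2', 'LOAD r1, 3', 'ADD r0, r1']
print(compile_sum(program, "arch_b"))  # ['mov 2 -> r0', 'mov 3 -> r1', 'sum r1 into r0']
```

Notice that `program` never changes. Supporting a new architecture only means adding an entry to `TARGETS`, which stands in for writing the compiler backend once.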

Of course, compilers aren’t the only way of translating code. Naturally, some programming languages opt for a more real-time approach. In the next section, we’ll take a look at one such approach known as an interpreter.

The Interpreter

Up until this point, we’ve talked about the assembler and the compiler. Each of these tools performs translation at a different level. For the assembler, its job is to convert low-level instructions into binary. For the compiler, its job is to convert high-level instructions into binary.

With high-level programming languages, the compiler is pretty much all we need. After all, the compiler offers us a lot of really great features like the ability to check if code syntax is valid before converting it to machine code.
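Python happens to expose this kind of up-front check through its built-in `compile()` function, which parses code without running it. The sketch below wraps it in a helper called `check_syntax`, a name chosen just for this example.

```python
def check_syntax(source: str) -> str:
    """Report whether the source parses, without ever running it."""
    try:
        # compile() only translates the code; nothing is executed here.
        compile(source, "<example>", "exec")
    except SyntaxError as error:
        return f"invalid: {error.msg}"
    return "valid"


print(check_syntax("print('Hello, World!')"))  # closing parenthesis present
print(check_syntax("print('Hello, World!'"))   # closing parenthesis missing
```

The first call reports valid code and the second reports a syntax error. The exact wording of the error message depends on the Python version, so it’s left out here.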

Of course, one drawback of the compiler is that changes to code require an entire rebuild of the software. For sufficiently large programs, compilation could take a long time. For instance, when I worked at GE, the locomotive software sometimes took up to 3 hours to compile (though, this could just be an urban legend), so it wasn’t exactly trivial to test. Instead, the entire team depended on nightly builds to test code.

One way to mitigate this issue is to provide a way to execute code without compiling it. To do that, we need to build a tool that can interpret code on-the-fly. This tool is known as the interpreter, and it translates and executes code line-by-line.
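As a rough illustration, the following sketch feeds a small program to Python’s built-in `exec()` one line at a time. The `run_line_by_line` helper is made up for this example, and it’s a simplification: it only handles one-line statements, and it isn’t how the real interpreter is built.

```python
def run_line_by_line(lines):
    """Translate and execute one line at a time, stopping at the first error."""
    namespace = {}  # holds the variables the program creates
    log = []
    for number, line in enumerate(lines, start=1):
        try:
            exec(line, namespace)
            log.append(f"line {number}: ok")
        except Exception as error:
            log.append(f"line {number}: {type(error).__name__}")
            break
    return namespace, log


program = [
    "x = 2",
    "y = x * 10",
    "z = y + undefined_name",  # this line fails when it is reached
    "w = 1",                   # this line is never reached
]

namespace, log = run_line_by_line(program)
print(log)             # ['line 1: ok', 'line 2: ok', 'line 3: NameError']
print(namespace["y"])  # 20
```

The first two lines run and produce results before the problem on the third line is ever noticed, which is the trade-off of translating on-the-fly.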

Fun fact: programming languages that leverage an interpreter rather than a compiler are often referred to as scripting languages—although that definition is a bit contentious. The idea being that programs in these languages are meant to automate simple tasks in 100 lines of code or less. Examples of scripting languages include Python, Perl, and Ruby.

As you can imagine, being able to run a single line of code at a time is pretty handy, especially for new learners. In fact, I don’t think I would have been able to pick up Java as easily if I didn’t have access to the interactions pane in DrJava. Being able to run code snippets from Java without filling out the usual template was a lifesaver.

That said, there are some drawbacks to using an interpreter. For example, interpreting code is fundamentally slower than executing compiled code because the code has to be translated while it’s executed. Of course, there are usually ways to address this drawback in speed, and we’ll talk about that in the next section.

Code Execution Spectrum

Up to this point, we’ve spent a lot of time defining terminology. While this terminology is important, the reality is that software systems are never this cut and dry. Instead, most programming languages are able to leverage a compiler, an interpreter, and/or some combination of both.

For example, Python isn’t the purely interpreted language that I may have let on. Sure, there are ways to run Python programs line-by-line, but most programs are actually compiled first. When Python scripts are written, they’re usually stored in a .py file. Then, before they’re executed, they’re compiled to bytecode, which Python typically caches in a .pyc file when a module is imported.
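Python normally handles this step on its own, but the standard library’s `py_compile` module can do it on request. Here is a small sketch that writes a throwaway script to a temporary folder and compiles it. The file name `hello.py` is just an example.

```python
import pathlib
import py_compile
import tempfile

with tempfile.TemporaryDirectory() as folder:
    # Write a one-line script to a temporary .py file.
    source_path = pathlib.Path(folder) / "hello.py"
    source_path.write_text('print("Hello, World!")\n')

    # Compile it, asking for the result to be saved next to the source.
    compiled_path = py_compile.compile(
        str(source_path), cfile=str(source_path) + "c", doraise=True
    )

    compiled_name = pathlib.Path(compiled_path).name
    compiled_size = pathlib.Path(compiled_path).stat().st_size

print(compiled_name)      # hello.pyc
print(compiled_size > 0)  # True
```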

Unlike traditional compilation, however, the compiled version of Python isn’t machine code; it’s bytecode. In the world of programming, bytecode is yet another level of abstraction. Rather than compiling directly to machine code, we can compile to a platform-independent intermediate representation called bytecode.

This bytecode is much closer to machine code than the original Python, but it’s not quite targeted at a specific architecture. The advantage here is that we can then distribute this bytecode on any machine with the tools for executing it (e.g. a Python virtual machine). Then, we can interpret that bytecode when we want to run it. In other words, Python leverages both an interpreter and a compiler.
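If you’re curious what bytecode looks like, the standard library’s `dis` module can display it. The sketch below compiles one line of Python, shows the bytecode, and then hands it to the interpreter to run. The listing that `dis` prints changes between Python versions, so it isn’t reproduced here.

```python
import dis

source = "total = 2 + 3"

# Step 1: compile the source text into a code object holding bytecode.
code_object = compile(source, "<example>", "exec")

# The raw bytecode is just a sequence of bytes.
print(code_object.co_code)

# dis prints a human-readable listing of those same instructions.
dis.dis(code_object)

# Step 2: let the interpreter execute the bytecode.
namespace = {}
exec(code_object, namespace)
print(namespace["total"])  # 5
```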

Another cool advantage of this design is that the bytecode is universal in more ways than one. On one hand, all we have to do to make sure Python runs on a machine is to make sure we have a bytecode interpreter. On the other hand, we don’t even have to write our original program in Python as long as we have a compiler that can generate Python bytecode. How cool is that?!
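One small way to see this without leaving Python is to skip the source text entirely. In the sketch below, the expression 2 + 3 is assembled by hand from the standard library’s `ast` building blocks and then compiled to bytecode. It’s only a stand-in for what a compiler for another language would do, not a real example of one.

```python
import ast

# Build the expression 2 + 3 as a tree of nodes, with no Python source text.
tree = ast.Expression(
    body=ast.BinOp(
        left=ast.Constant(value=2),
        op=ast.Add(),
        right=ast.Constant(value=3),
    )
)

# Fill in the line and column numbers that compile() expects on each node.
ast.fix_missing_locations(tree)

# Turn the tree into bytecode and let the interpreter evaluate it.
code_object = compile(tree, "<generated>", "eval")
result = eval(code_object)
print(result)  # 5
```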

All that said, it’s not strictly necessary to generate a .pyc file to run Python code. In fact, you can run Python code line-by-line right now using the Python interpreter (i.e. the Python REPL). In the next section, we’ll finally write our first lines of code.

The Python Interpreter in Action

At this point, I think we’ve gotten more context around programming than we could possibly ever need. As a result, I figured we could take a moment to actually see some Python code in action.

To do that, we’ll need to download a copy of Python. Previously, I recommended getting the latest version of Python, but you’re welcome to make that decision yourself. Otherwise, here’s a link to Python’s download page.

To keep things simple, we’ll go ahead and use IDLE, which comes with Python. Feel free to run a quick search on your system for IDLE after you’ve installed Python. It may already be on your desktop. When you find it, go ahead and run it.

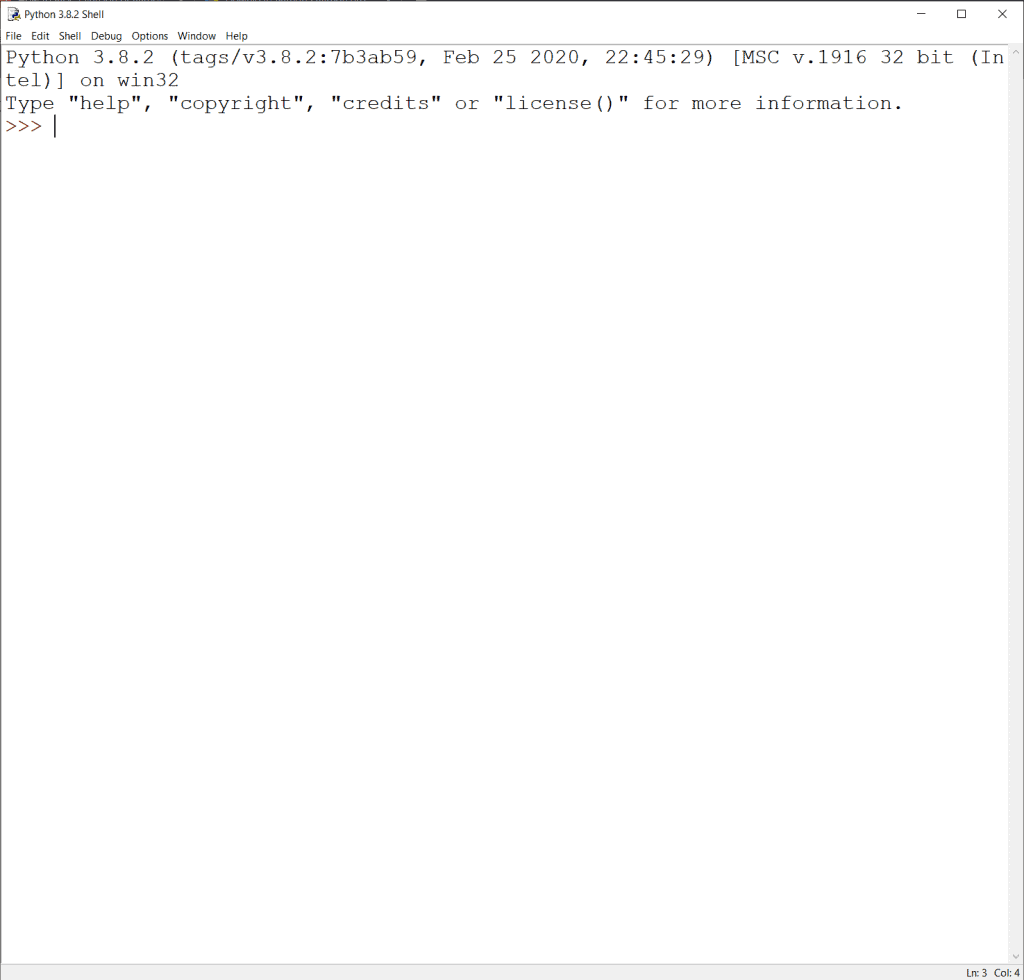

If all goes well, you should launch a window that looks like this:

What you’re looking at is a Python Read-Eval-Print Loop, or REPL for short. Basically, a REPL is an interpreter that runs code every time you hit ENTER (more or less). Why not take a moment to run some of the commands the tool recommends such as “help”, “copyright”, “credits” or “license()”?
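For the curious, the read, evaluate, and print steps can be imitated in a few lines of Python. This is only a sketch under simplifying assumptions: the `tiny_repl` function is made up for this example, it takes its input from a list instead of the keyboard, and it only handles one-line statements.

```python
import contextlib
import io


def tiny_repl(lines):
    """Read each line, evaluate it, and record whatever it prints."""
    namespace = {}  # variables persist between lines, like in the real REPL
    transcript = []
    for line in lines:                                    # Read
        output = io.StringIO()
        with contextlib.redirect_stdout(output):
            # "single" mode echoes the value of bare expressions,
            # which is what an interactive prompt does.
            code_object = compile(line, "<repl>", "single")
            exec(code_object, namespace)                  # Eval and Print
        transcript.append((line, output.getvalue().strip()))
    return transcript                                     # Loop ends with the input


session = tiny_repl(["1 + 1", "name = 'World'", "print('Hello, ' + name + '!')"])
for line, result in session:
    print(">>>", line)
    if result:
        print(result)
```

Running this prints a transcript that looks a lot like a real session: `1 + 1` echoes `2`, the assignment prints nothing, and the last line prints `Hello, World!`.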

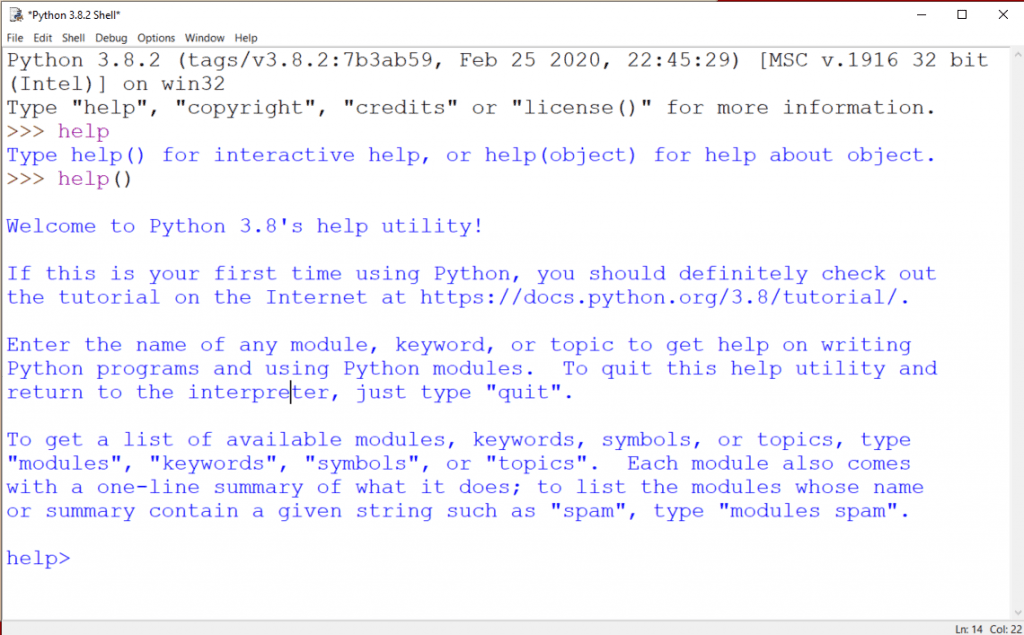

If you started with the “help” command, you probably saw something that looked like this:

If you want to get out of the help menu, type “quit”. Otherwise, take some time to explore the REPL.

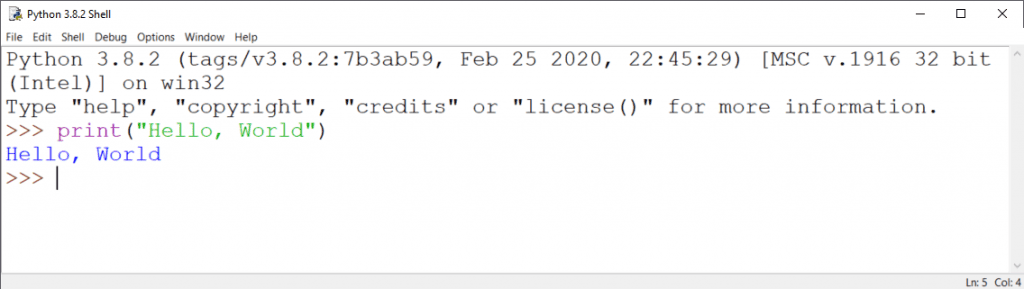

When you’re back to the Python interpreter (you’ll know when you see >>>), try typing the following:

print("Hello, World!")

Guess what? You just wrote your first Python program! If all went well, you should have seen something like this:

In other words, you managed to print “Hello, World!” to the user. As we move forward in this series, we’ll learn more about what this means. For now, just know that you’ve written your first program. Give yourself a pat on the back.

Opening Pandora’s Box

By taking your first step in learning to code in Python, you’ve inadvertently opened up Pandora’s Box. Now, every step you take will open up a new world of curiosity and exploration. Tread lightly.

All kidding aside, this is really an exciting time to be learning to program. As someone picking up Python, you have a wide variety of places you can take the language. For instance, Python is used in some game development—namely Blender. It’s also really popular right now in machine learning with libraries like PyTorch, TensorFlow, and OpenCV. Likewise, I believe it’s used on the backend of some websites through tools like Flask and Django.

If you’re a weirdo like me, you’ll use the language for just about anything. After all, I like the language for what it is, not necessarily for where it’s useful. It’s really a pretty language, and I hope you grow to appreciate that as well.

Now that we’ve gotten a chance to see the Python interpreter in action, we can finally dig into some code. Up next, we’ll start talking about Python’s language design. In particular, we’ll look at programming language paradigms and how Python supports a little bit of everything. After that, I think we’ll talk about data types.

In the meantime, why not take some time to show your support by checking out this list of ways to help grow the site. Over there, you’ll find information about my Patreon, newsletter, and YouTube channel.

Alternatively, you’re welcome to stick around with some of these cool Python articles:

- Coolest Python Programming Language Features

- Python Code Snippets for Everyday Problems

- The Controversy Behind the Walrus Operator in Python


Otherwise, thanks for taking some time to learn about the Python interpreter! I hope this information was helpful, and I hope you’ll stick around with this series.
