Recently, I was ranting about Google’s new AI Overview feature when I stumbled upon an old article of mine from 2020. Back then, I investigated keywords for some of the articles I’d written to scope out the competition. What I found was that Google wasn’t even trying to rank decent computer science content. Since my site hasn’t really grown since then, I decided to check the same keywords to see what’s going on! Spoiler alert: it’s worse somehow.

Table of Contents

- Following Up On Old Gripes With Google Search

- Reviewing “How to Check If a List is Empty in Python” Again

- Reviewing “Python Code Snippets” Again

- Contextualizing the Problem

- Key Takeaways

Following Up On Old Gripes With Google Search

As you can imagine, I reviewed the article from 2020, and I’ve realized that there are problems on Google that persist to this day. The bulk of the rest of this article will show you what I mean, but I want to share just a couple up front.

Google Ranks Duplicate Content Separately

One of the issues I’d like to bring up in particular is something I noted in the syndication section. Specifically, I talked about how if you post an article in more than one place, you should add a canonical tag back to the original, so Google won’t rank them both.

Well, you may be surprised to know that both articles for the query “how to invert a dictionary in python” are still ranked four years later. Of course, instead of them being ranked 1st and 3rd, they’re ranked 4th and 11th, respectively. Just put the main one at 4th! What the hell is Google doing?

To give Google credit, the two articles are different in the sense that one platform allows for comments and the other doesn’t. So, my guess is that the comments coupled with the domain authority of the other site have caused it to continue to outrank the original. I do think it’s stupid regardless, and I wish I could add a noindex tag to the syndicated version.
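For anyone who wants to check what a syndicated copy actually declares, here is a minimal sketch using only the standard library. The markup, the `example.com` URL, and the `HeadTagFinder` name are all hypothetical; a real page would have to be downloaded first, and nothing here says how Google weighs these tags.

```python
from html.parser import HTMLParser


class HeadTagFinder(HTMLParser):
    """Collect the canonical URL and robots directive from a page (illustrative helper)."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.robots = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # <link rel="canonical" href="..."> points back at the original article.
        if tag == "link" and (attributes.get("rel") or "").lower() == "canonical":
            self.canonical = attributes.get("href")
        # <meta name="robots" content="noindex"> asks crawlers not to index the page.
        elif tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            self.robots = attributes.get("content")


# Hypothetical markup for a syndicated copy; the URL is a placeholder.
syndicated_page = """
<html><head>
<link rel="canonical" href="https://example.com/original-article/">
<meta name="robots" content="noindex">
</head><body>...</body></html>
"""

finder = HeadTagFinder()
finder.feed(syndicated_page)
print(finder.canonical)
print(finder.robots)
```

If `canonical` comes back as `None` on the copy, the platform never emitted the tag in the first place.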

Anti-intellectual Content Is Multiplying

Also, as you’ll see shortly, I ran a search again for the “how to check if a list is empty in python” keyword, and I found that many of the same complaints I made four years ago are valid today. For instance, I said this:

In general, I wouldn’t say any of this content is bad, but it’s definitely not great. For example, one of the articles claims that you can check if a list is empty by the “not operator.” This isn’t wrong, necessarily, but it doesn’t really explain why it works.

Looking at the articles now—and there are literally dozens—the use of the phrase “not operator” is even more common. To me, that’s a bit of a problem because it doesn’t explain why the solution works. I suppose if you’re just looking to copy code you might not care, but the “not operator” here isn’t actually doing the work. I know it’s pedantic, but as someone who takes care to write educational content, it’s a bit troubling to see these types of explanations (or lack thereof) littering the search index.
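To make the distinction concrete, here is a small sketch of what happens when a list is used in a boolean context. The list supplies its own truth value (empty means falsy), and `not` merely flips it. The `truth_report` helper is a made-up name for illustration.

```python
def truth_report(items):
    """Return (truth value of items, result of `not items`)."""
    # For a list, the truth value comes from its length: zero means falsy.
    truth = bool(items)
    # `not` only flips the truth value the object already has.
    return truth, not items


print(truth_report([]))         # (False, True)
print(truth_report([1, 2, 3]))  # (True, False)

# The explicit length check gives the same answer for lists.
print(len([]) == 0)             # True
```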

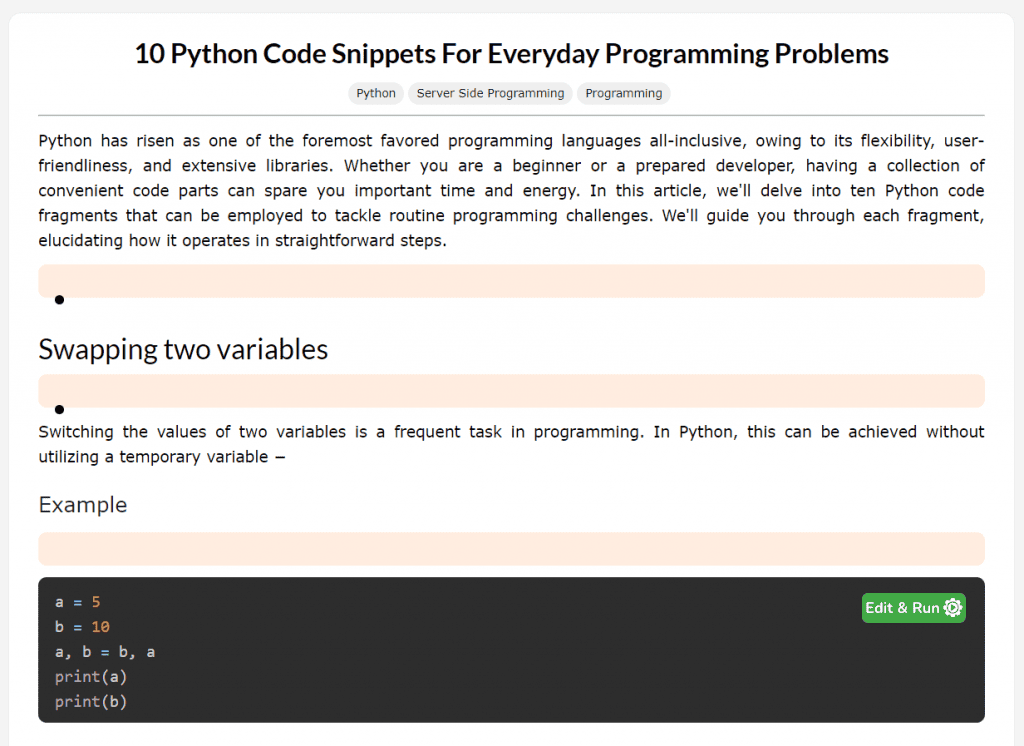

Google Does Not Rank the Canonical Article

And since we’re here, I just need to rant about Tutorials Point again. I trash talked them pretty hard in the last article for writing thin content and stealing the top rankings on what I can only assume at this point is domain authority. After revisiting some of their old work, I was almost tempted into walking back my complaints. That was until I searched up the “python code snippets” keyword for my article titled 100 Python Code Snippets for Everyday Problems, which I published on December 27th 2019. Unsurprisingly, I found our old friends, Tutorials Point, stealing my article title practically verbatim.

Part of me thought maybe it was just a common title, and I was getting a bit upset over nothing. But then I remembered, I have receipts. Not a single article in the top 6 had a title like mine back in 2020. Yet somehow, Tutorials Point has an article spawn in 2023 with almost the exact title of mine with literally no history of it in the web archive.

Imitation Is the Sincerest Form of Flattery

But wait, it gets even more ridiculous because Tutorials Point isn’t even the only offender. GeeksForGeeks also has an article with the exact same title as Tutorials Point but published almost two years earlier in 2021. And before long, I started to notice a variety of articles with variations of my title:

- 19 Python Code Snippets for Everyday Issues (2023-09-26)

- 10 Python Code Snippets for Everyday Programming Problems (2021-12-01)

- 11 Python Boilerplate Code Snippets Every Developer Needs (2023-01-12)

- 10 Most Popular Python Code Snippets Every Developer Should Know (2023-09-03)

- 14 Code Snippets That Every Python Programmer Must Learn (2023-01-05)

Meanwhile, my article isn’t even listed until rank 27. Even worse, we run into the syndication problem again where the old version of the article is the one Google has chosen to index. In other words, what you’ll see in search is “71 Python Code Snippets for Everyday Problems” on dev.to. I am amazed Google still manages to mess this up.

With that rant out of the way, let’s take a slightly deeper dive into the pair of queries we explored last time.

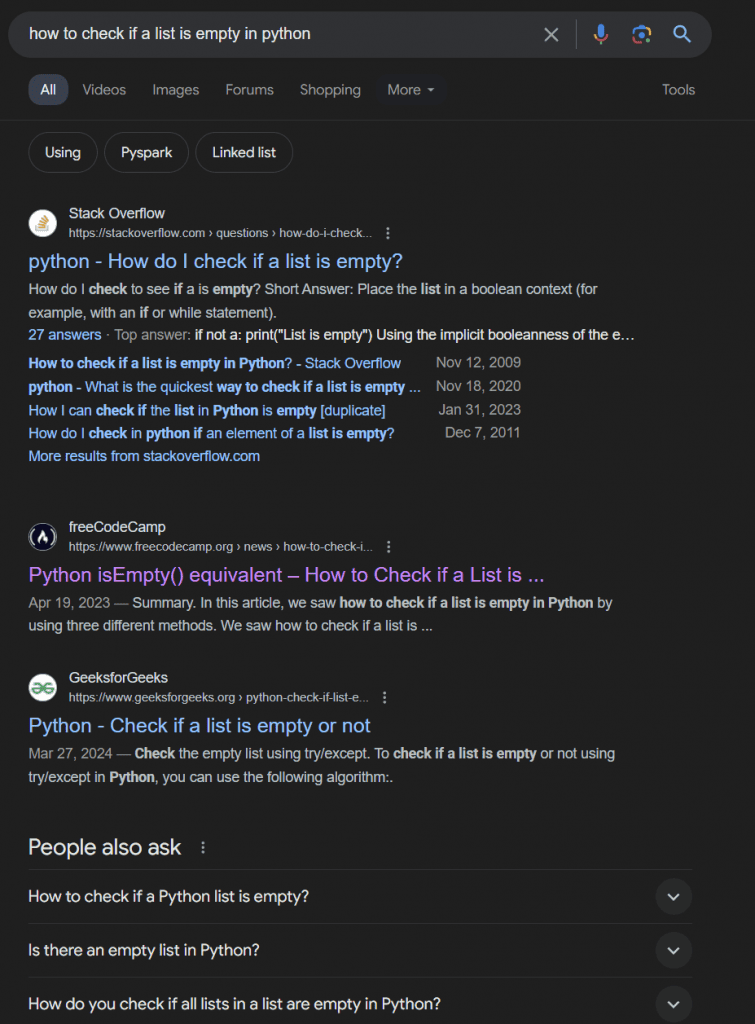

Reviewing “How to Check If a List is Empty in Python” Again

One of the articles I wrote back in 2018 was called How to Check If a List is Empty in Python. At the time in 2020, it was ranked second for the search phrase, “how to check if a list is empty in python.” Today, it’s ranked 72nd. Let’s take a look at the competition:

Unlike in the past, you can see there is no snippet for this search term anymore. Instead, Google actually looks somewhat like what you’d expect: a list of search results. Unfortunately, the results aren’t great.

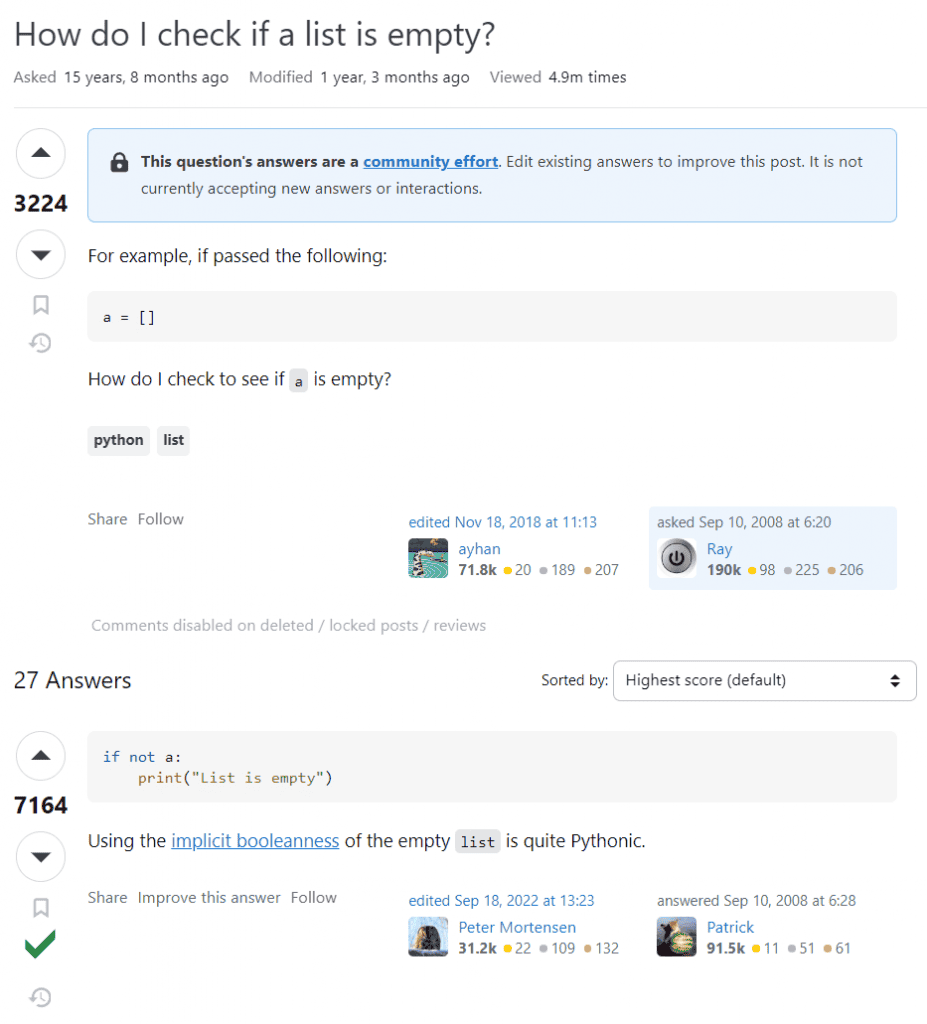

Rank 1: Stack Overflow

At the top is the same site I attempted to dethrone many years ago, Stack Overflow. For the record, the content is largely the same:

I can’t really be too upset about this because Stack Overflow does tend to have good information on it. However, I still wish it wasn’t so popular, as the culture of the platform is very toxic.

Rank 2: freeCodeCamp

At rank 2 is a relatively new article from an independent author on freeCodeCamp. The content itself is nearly identical to my article in terms of solutions provided, albeit in a different order, with different wording, and with different examples. It’s also significantly more streamlined, which I can understand if all you want is a solution and no explanation.

The only thing that is kind of weird is the title: “Python isEmpty() equivalent – How to Check if a List is Empty in Python.” Nowhere in the article is “isEmpty()” ever mentioned. I would expect with a title like this that the content would be trying to get you to connect your current knowledge to some new knowledge, but the article never makes that connection explicit.

Overall, though, I can’t really complain. The article seems fine.

Rank 3: GeeksForGeeks

At rank 3 is our old friend GeeksForGeeks, which I can’t stand. Last time, I think they were at rank 4, so they somehow gained a position. I don’t recall what the page used to look like, though I suppose I could check the web archive. That said, I was somewhat pleased with the content this time around. There’s at least an example of the problem and a host of solutions that go far beyond the three I listed (though, some of them are truly absurd). The code samples are also pretty strange, with no regard for naming conventions or software design principles. Just take a look at this:

```python
def Enquiry(lis1):
    if not lis1:
        return 1
    else:
        return 0

# Driver Code
lis1 = []
if Enquiry(lis1):
    print("The list is Empty")
else:
    print("The list is not empty")
```

What is the point of the Enquiry function? Why make use of type flexibility with integers to show type flexibility of lists? I’m so confused. With the way content always seems to look on GeeksForGeeks, I would not be surprised if this was generated by ChatGPT.
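For comparison, here is one way the same example could be written so the helper returns a real boolean instead of an integer. This is only a sketch; `is_empty`, `numbers`, and `message` are hypothetical names, and the helper is arguably unnecessary since `not numbers` could be used directly.

```python
def is_empty(items):
    """Return True when `items` has no elements."""
    # An empty list is falsy, so negating it yields an actual bool.
    return not items


numbers = []
if is_empty(numbers):
    message = "The list is empty"
else:
    message = "The list is not empty"
print(message)
```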

Rank 4+: Everyone Else

Anyway, following rank 3, there’s actually a huge gap in the search results. At that point, Google provides a feature titled “people also ask,” which is quite fun to explore if you’re bored. Then, under that is a small section titled “discussions and forums” listing off Stack Overflow again, alongside Reddit and Quora.

From there, there are a series of new sites plus the other previous suspects:

- Programiz (173 words): shows the same three solutions

- Flexiple (454 words): shows five solutions but three of them are duplicates

- Tutorials Point (974 words): I previously complained about this one, but it’s much more thorough now

- IOFLOOD (1994 words): a bit more interesting and covers more cases

As I complained previously, most of these articles are quite short and are more or less copies of each other. Worse still, a lot of them look like they straight up ripped my code. Of course, that’s hard to prove, but many of them use the same variable names and even the same comments, though sometimes as print strings.

With that said, I am slightly more happy with the pages I trashed in the past. It seems that there’s just a lot more garbage now.

Also, I’ll say that I went back and reviewed my article and noticed some artifacts in the text from when I used to use a different inline highlighting plugin (e.g., text that looked like this: `run()`python). But if that’s the only reason I’m sitting at rank 72, that’s pretty absurd. I suppose I’ll check back in a few weeks and see if anything has changed.

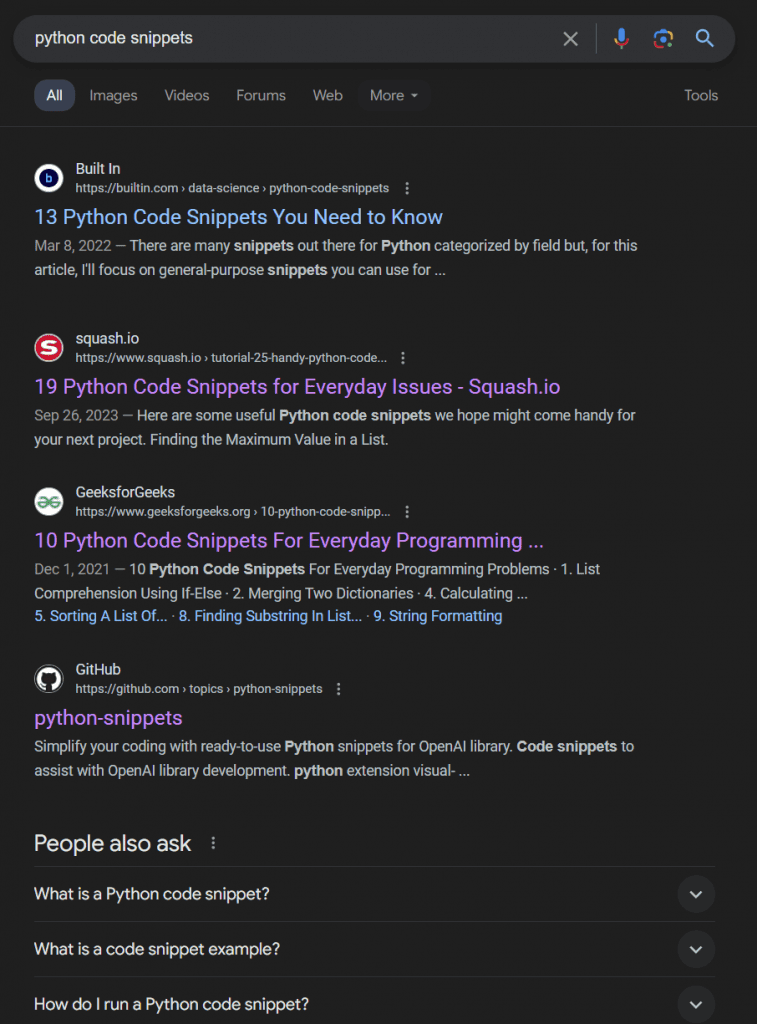

Reviewing “Python Code Snippets” Again

When I think about my 100 Python Code Snippets for Everyday Problems article, I think of it as one of the few examples of what I’d call an “ethical listicle.” Usually, at least in my experience, listicles are low-quality bait posts that were popularized by sites like BuzzFeed. The whole premise is that they’re meant to be relatable but ultimately clickbait, so you can get that sweet, sweet ad revenue.

In contrast, my article doesn’t just list a bunch of code snippets but actually backs each of them with another article which describes them in more detail. The content itself is organized by data structure, so the snippets themselves are organized in groups. I don’t think you can really make a list post more informative.

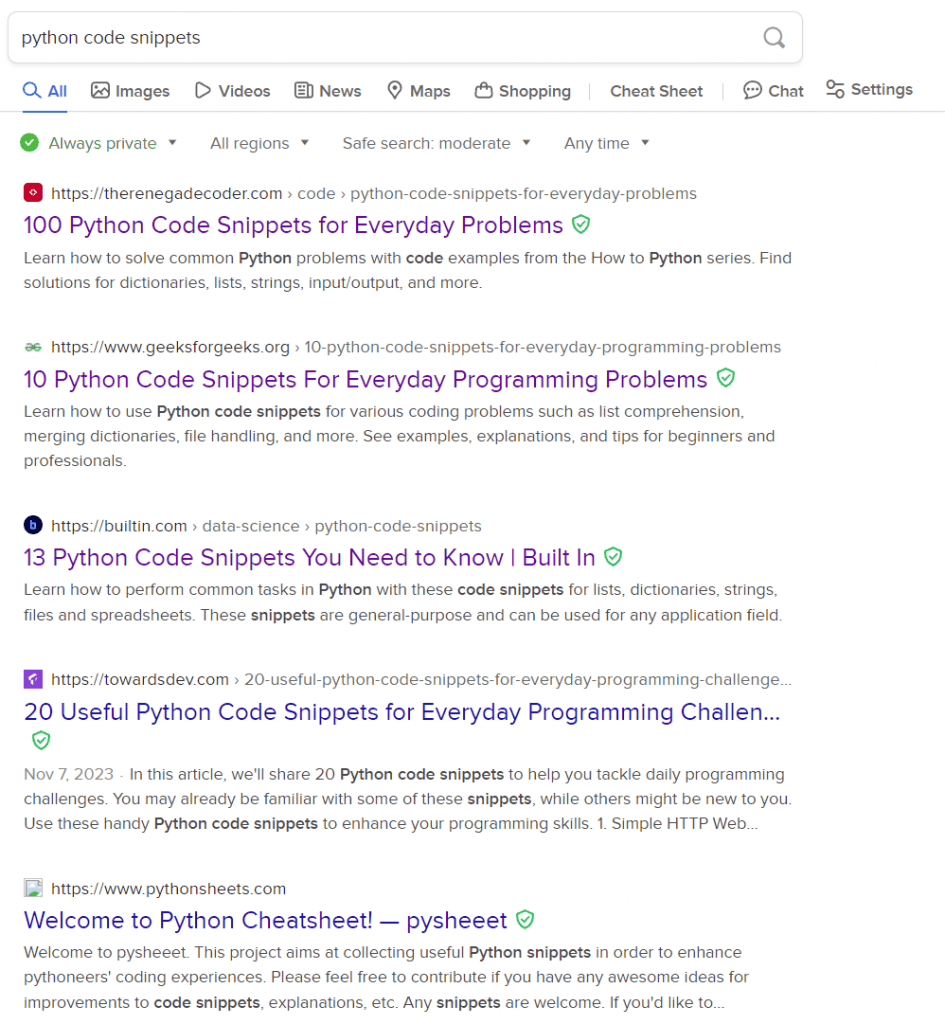

To me, that should put my article near the top in terms of rank, but that’s unfortunately not what I’ve found. Instead, it’s sitting at rank 27. Here’s the competition:

At a glance, I don’t really see any of the old articles ranking in the top anymore—not just mine. When comparing the new titles with the old title, I would argue that the results are roughly the same (i.e., a mix of list posts). So, let’s see how the content differs!

Rank 1: BuiltIn

Up top, we have an article titled “13 Python Code Snippets You Need to Know,” which seems to have come on the scene on March 8th 2022. With a quick peek, I notice that the structure of the article is pretty similar to mine in that it organizes the solutions by data structure (e.g., lists, dicts, strings, etc.).

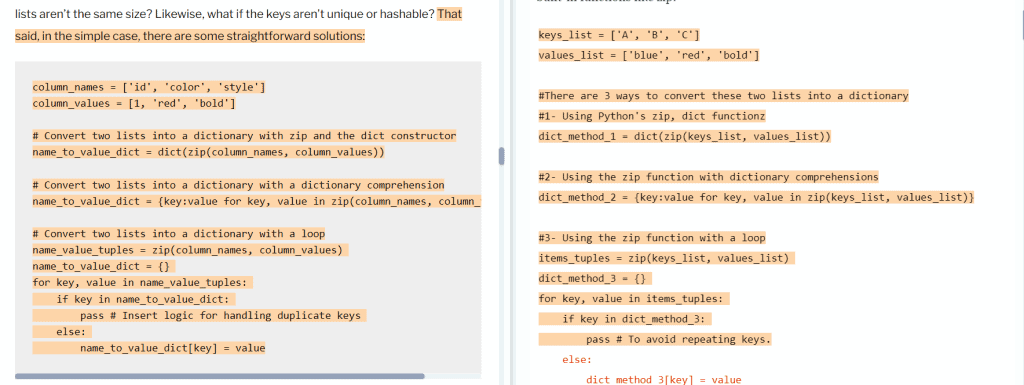

Out of curiosity, I tried comparing my article to theirs with a plagiarism detection tool and found that there was really only 3.6% plagiarism. However, the sections that were plagiarized were a bit egregious. For example, the code snippet I use to show how to combine two lists into a dictionary is almost copied verbatim:

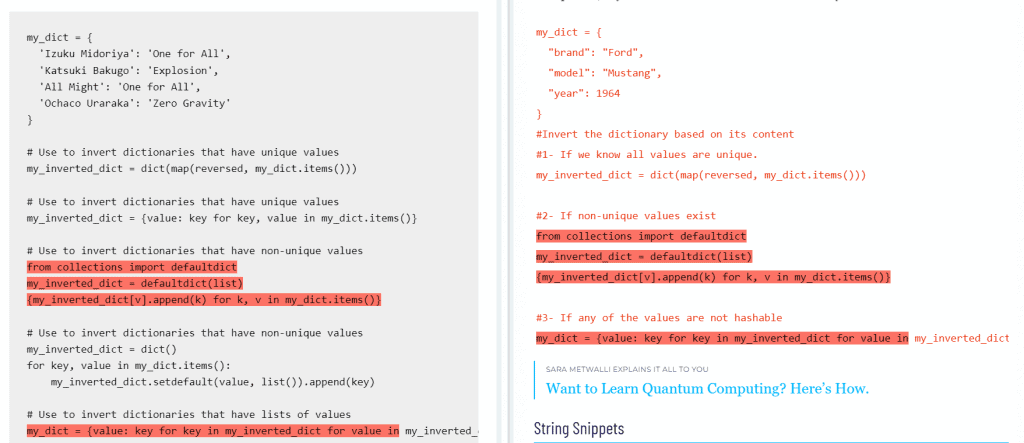

And, those are the paraphrased solutions. There are several that are ripped directly. For example, two of my dictionary inversion solutions are ripped verbatim:

Out of curiosity, I thought to even search for the variable name “my_inverted_dict,” since I figured it was unique enough. And sure enough, three of my articles show up from as early as December 4th, 2017. In hilarious fashion, my solution is used in a Stack Overflow answer almost two years later. It’s also copied in a bunch of other places, which caused me to stop looking lest I get very sad.

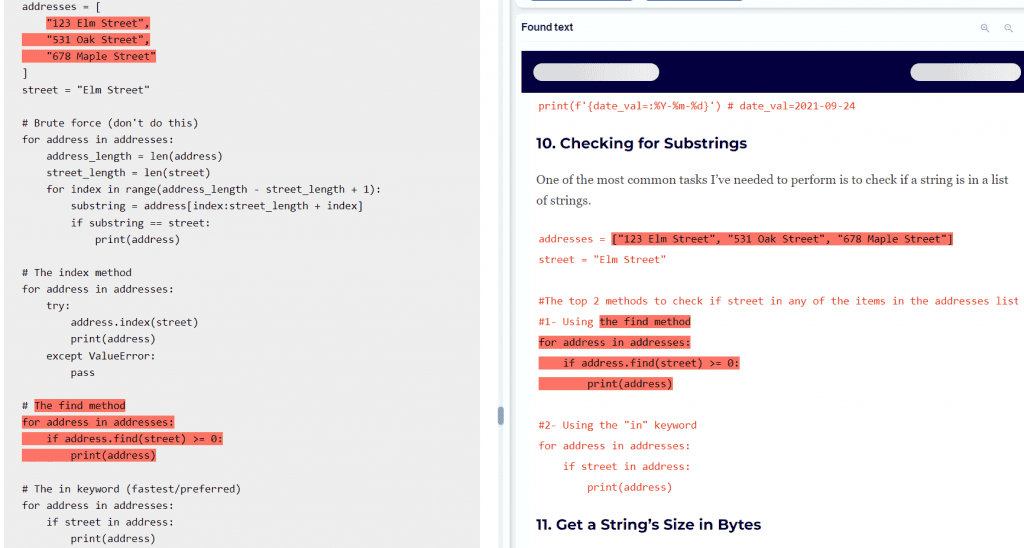

But wait! There’s more. My personal favorite stolen set of code snippets comes from my goofy search engine implementation (meta, I know) where the author just straight up copied the example list:

I’m not even certain they understood what they copied because it’s very easy to check if a string is in a list, like they claim they’re doing. What these solutions do is check if a substring exists within a string in a list. I also find it funny that the plagiarism tool I used didn’t quite catch how much of the two code sets was actually identical, so I assume the 3.6% is a conservative estimate.
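The difference between the two checks is easy to see in a short sketch. The data below is hypothetical and is not the example list from either article.

```python
# Hypothetical example data, not the list from either article.
words = ["red panda", "blue whale", "green turtle"]

# Membership test: is this exact string an element of the list?
exact_match = "panda" in words

# Substring search: which elements contain this text somewhere inside them?
substring_matches = [word for word in words if "panda" in word]

print(exact_match)        # False
print(substring_matches)  # ['red panda']
```

The first check only succeeds on a whole-element match, while the second looks inside each string.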

If you’re bored, I’d recommend comparing the two articles. There are some other funny copies. For instance, my entire parsing spreadsheet solution is copied.

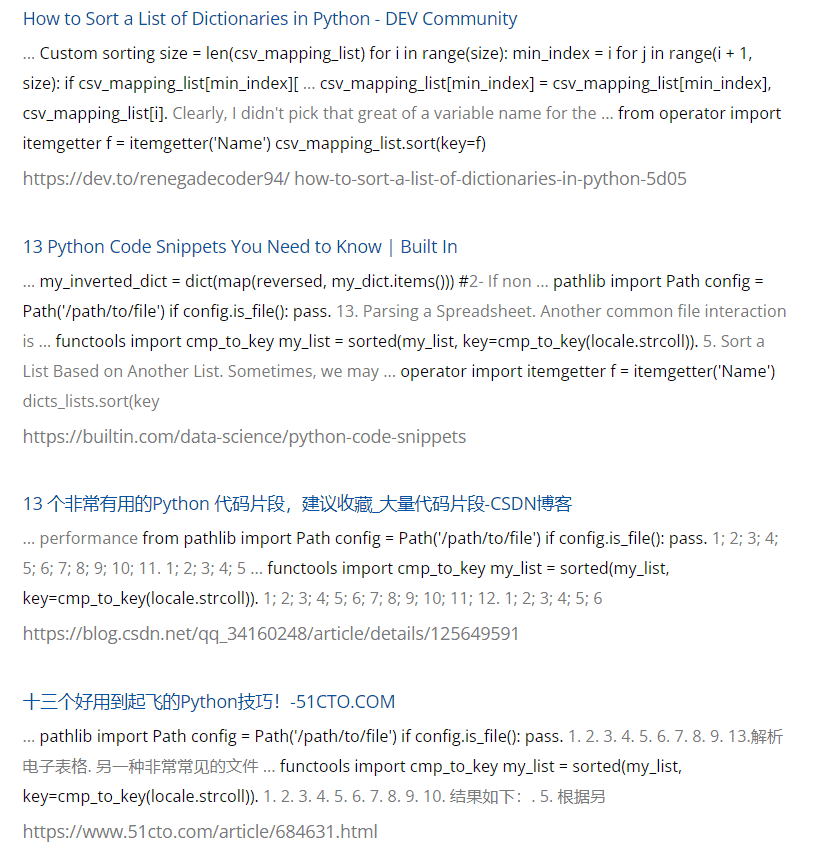

This did, of course, lead me down a rabbit hole of trying to detect plagiarism on articles on my site. According to Neil’s post, you can do this easily by dropping your article URL into copyscape. If you do that with my article, look who shows up:

So, the top ranking result on Google for the keyword “python code snippets” is an article that directly plagiarizes from my article, which ranks at an abysmal 27th. I have to wonder how much more of their article is plagiarized.

Rank 2: Squash Labs

At a glance, the Squash Labs article looks fine. It’s definitely one of the longer articles, and it has a nice navigational sidebar. However, it did not take me long to discover that the code snippets and explanations had bugs.

For example, the very first solution claims that the max() function can be used to find the max item in a list. This is true, though “max” probably needs to be defined with respect to whatever data type you’re using—which they cover, kinda. Specifically, they show two code snippets: one for integers and one for strings. However, the string solution is just wrong. Take a look:

```python
fruits = ['apple', 'banana', 'cherry', 'date']
max_fruit = max(fruits)
print(max_fruit)  # Output: cherry
```

In the solution above (if you haven’t caught it yet), they claim that “cherry” is the last word alphabetically in the list, and it just isn’t. max_fruit stores “date” because “d” comes after “c” alphabetically (which is actually somewhat irrelevant anyway but not the point).
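Running the snippet confirms the actual result. The sketch below also shows why "alphabetically" is only loosely the right word: strings are compared by code point, so case matters unless a `key` is supplied. The `mixed` list is a made-up example.

```python
fruits = ['apple', 'banana', 'cherry', 'date']

# Strings compare character by character, so 'date' beats 'cherry' ('d' > 'c').
max_fruit = max(fruits)
print(max_fruit)  # date

# Comparison uses code points, and uppercase letters come before lowercase ones.
mixed = ['apple', 'Zebra']
print(max(mixed))                 # apple
print(max(mixed, key=str.lower))  # Zebra
```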

Now, in general, I’ve received complaints for having bad code in my solutions, and it’s mostly due to laziness. I get a solution working, make some minor changes, and copy over the lines without testing everything again. It happens. So, I will cut them a break, especially since I didn’t notice any other bugs in their solutions.

I will say that they make a lot of claims in their writing without any sources or justifications. Again, I don’t think that matters, but saying something like “membership tests for sets are faster than for lists” should probably be substantiated or demonstrated. It’s part of the reason why my articles include performance tests. Personally, I don’t believe their claim if the first step involves converting the list to a set just to do the membership test.
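A claim like that is cheap to check with `timeit`. The sketch below separates three cases: scanning the list, converting to a set on every check, and reusing a set that already exists. The sizes and repetition counts are arbitrary, and the timings will vary by machine, so no numbers are quoted here.

```python
import timeit

items = list(range(100_000))
target = 99_999  # worst case for the list scan: the last element

# Membership on a list compares elements one by one.
list_time = timeit.timeit(lambda: target in items, number=20)

# Converting first pays for a full pass over the list on every check.
convert_time = timeit.timeit(lambda: target in set(items), number=20)

# A set that already exists answers with a hash lookup.
prebuilt = set(items)
set_time = timeit.timeit(lambda: target in prebuilt, number=20)

print(f"list scan:          {list_time:.4f}s")
print(f"convert, then test: {convert_time:.4f}s")
print(f"prebuilt set:       {set_time:.4f}s")
```

The interesting comparison is between the first two lines of output, since that is the scenario where the conversion cost is paid just to do a single membership test.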

At any rate, before moving on, I did try to do some plagiarism detection. I kind of figured there wouldn’t be any, and there wasn’t—at least when compared to my site directly. However, copyscape connected their article to a variety of sources, suggesting to me that maybe the article was written with the help of generative AI. I have a hard time believing somebody would go through this much effort to integrate so many sources, but I suppose if James Somerton can do it …

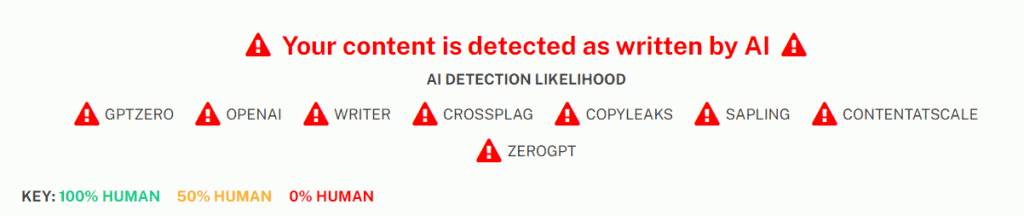

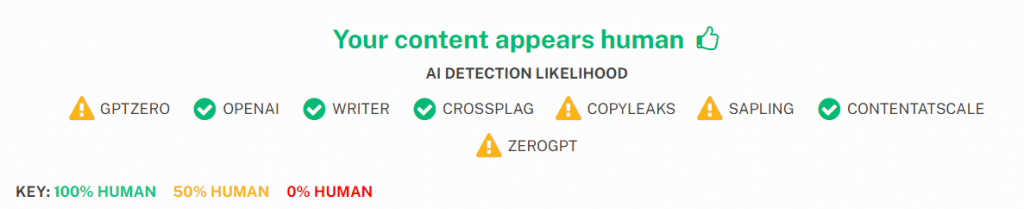

Because my brain is rotted, I did try passing some of the article through an AI detector. I don’t totally trust AI tools for detecting generative AI, but every single model came back as likely AI:

Whereas, my article, which was written before these tools became mainstream, comes back as “appearing human.”

Last thing I’ll comment on is the weird URL. While I was going down the rabbit hole, I noticed the URL reads “25 handy python code snippets for everyday issues” while the article is titled “19 python code snippets for everyday issues.” That’s weird, isn’t it?

Rank 3: GeeksForGeeks

In third place for this keyword as well is our old friend, GeeksForGeeks. Again, there’s not much to say. I skimmed their article, and it looked pretty decent. None of the solutions seemed wrong, nor were they weird like the other article. I even did the same plagiarism checking, and I didn’t really find anything. So overall, I was fairly pleased with it.

In general, I don’t really like GeeksForGeeks. They have a content management problem, where not everything is the same quality. That said, I don’t have much to complain about here. I would be happy with it ranking above the other two articles so far.

Rank 4+: Everyone Else

If you keep going down the list, you’ll see a lot of the same kinds of content plus some weird stuff, like the topic page for Python Snippets on GitHub. It seems weird to index that given it’s basically an index page, but Google doesn’t seem to mind. There’s also some advertising-style content in the “Pieces for Developers” link.

Later down the list, there are your usual Q&A style links, like a Reddit thread on how to save code snippets, and a User Support Hub thread on how to use code snippets in Jupyter Notebooks. Again, I’m not sure how those are more relevant than my article, but we let Google do its thing.

If you keep scrolling, you’ll start to see a few more list posts and then you get into some documentation. Then, Google sneaks in a few more ads, and suddenly the results are completely unrelated. That’s not a great user experience.

Contextualizing the Problem

In order to really claim that Google sucks at ranking good content, it’s important not just to look at how it’s changed over time. It’s also important to look at how the results compare to other search engines.

There are many search engines to choose from, but by far the one that drives the most traffic for me beyond Google is DuckDuckGo. As a result, I decided to take a peek at the search results for the same keywords. What I found is almost comical.

To start, here are the results for the keyword “python code snippets” on DuckDuckGo:

What Google thinks is 27th place, DuckDuckGo places at the top of search. Sure, the plagiarized article is 3rd, but I’ll take that over outperforming the original.

As for the “How to Check If a List is Empty in Python” query, here’s what we get:

My article doesn’t show up until rank 15, but that’s miles better than 72. Otherwise, the results are pretty similar. Tutorials Point luckily doesn’t show up until after my article, so I’m happy about that.

I will also say that basically every result seems to be relevant, even if I don’t agree that the quality of the content matches the ranking. That alone makes me feel a lot better about it as a search engine.

Just for fun, I also looked at the same queries on other search engines, and here were my respective rankings:

| Keyword | Google Rank | DuckDuckGo Rank | Bing Rank | Yahoo Rank |
|---|---|---|---|---|
| Python Code Snippets | 27 | 1 | 1 | 1 |
| How to Check If a List is Empty in Python | 72 | 15 | 15 | 13 |

In other words, on average, my articles just perform better on basically every other search engine. I’m not sure what that says about those other search engines, but it tells me that Google isn’t working for me. Maybe some day I’ll figure out why.

Key Takeaways

Having reviewed Google’s search results for a couple of my old articles, I got to thinking a bit more deeply about what exactly is wrong with Google. Here are a few of my key takeaways:

- Google does not care about plagiarism: this one should be obvious. After all, I wrote a whole article about their new generative AI feature, which is essentially stealing with extra steps. Between that and plagiarized material outranking the original, it’s hard to know what to trust in the search results.

- Google doesn’t handle content syndication well: I have reposted my articles in a few different places on the internet to try to expand my reach. Unfortunately, that has resulted in the copies outranking the originals in search.

- Google doesn’t handle mimicry well: it seems common for content farm operations to see a popular article in search results and copy its title basically verbatim. I assume this is because the title conveys a lot of information related to the search phrase, but that just results in what looks like (and mostly is) a bunch of slop in the search results. Ultimately, it makes genuine content look bad in comparison, like we’re hopping on a trend or something.

- Google does not care about accurate information: this is a criticism Google has gotten for a long time, especially with regards to medical advice. However, I think it’s probably an issue across the board. It did not take me long to find information that was just wrong.

- Google does not care about relevance: this is a common complaint you’ll see people make now about how Google works. You’ll search for something, and you’ll get links to things that are tangentially related. So you end up iteratively refining your search, hoping to find anything of value. That’s definitely not ideal for a search engine whose primary purpose is to provide relevant results.

The last thing I’ll say is that I never want to turn this into some weird witch hunt where people go out and shame other writers, even if they plagiarize or use tools like ChatGPT. Instead, I want to be critical of the systems that incentivize this type of behavior. If Google was doing its due diligence as a search engine, people would not see the benefit of content farming, plagiarism, or generative AI as they would not produce desirable results on the search engine.

In general, I think we give these companies too much of a break when we run defense for them by saying things like: “it’s really hard to put together a good search algorithm to combat these issues.” Even sites that report on the horrible state of Google search seemingly place the blame on spam sites and not the search engine itself.

All of that is to say that I’ve started using DuckDuckGo. While I haven’t used it long enough to really have a preference, I can’t in good conscience continue using Google.

With that said, let’s call it a day here! I’ve probably spent too much time on this topic anyhow. As always, if you liked this article, there are more like it:

- Google Threatens to Ruin Search as We Know It

- AT&T Is a Disaster: The Offer That Never Came to Be

- 11 Reasons Why I Quit My Engineering Career

Likewise, you can take the extra step to support the site by checking out this list. Otherwise, take care!
