As you begin your Python journey, I figured it might be a good time to clue you in on the basics of computing through the lens of history. In this article, we’ll talk about where computers come from, why we use binary, and how Python fits into all this.

As a bit of a warning, this is a very different style of content than I normally write. I’m not much of a history person, but I think it’s important to have context around the things you’re about to learn. In other words, I’m jumping on the “why is programming like this?” questions early! Let me know if you like it.

Table of Contents

- A Brief History of Computing

- An Introduction to Logic

- From Switches to Binary

- Introducing Volatile Memory

- Synchronizing Circuitry

- From Hardware to Software

- The State of Python in 2020

- A Word to the Wise

A Brief History of Computing

As someone who’s rather young—26 at the time of writing—I haven’t really lived through the history of computing. In fact, I’m not sure I could even consider myself an expert on the history of the internet. That said, I do know a little bit about how we got here, so I figured I’d share what I know.

In order to talk about the history of computing, we actually don’t have to go back too far. After all, the first modern computer (the kind that supported a graphical user interface and a mouse) didn’t arrive until the 1960s. Before that, computers were typically rather niche and could only be used through a plugboard (e.g. the ENIAC) or punch cards (e.g. the IBM 029).

It wasn’t until the 1970s that modern programming really took off. Even in that time, a lot of machines were designed exclusively with hardware. For instance, Nolan Bushnell built his entire empire (Atari & Chuck E. Cheese) on hardware-based video games, no software required.

Of course, programming languages like COBOL and FORTRAN paved the way for software development in the 1950s. By the 1970s, high-level languages like C and Pascal made programming more accessible. Today, there are allegedly over 700 programming languages, though pretty much anyone can make their own nowadays.

An Introduction to Logic

While the history of computing is interesting, we haven’t really taken the opportunity to talk about how computers actually work. After all, it’s cool that we were able to develop computers, but how did that technology become possible?

The invention that ultimately allowed us to create the modern computer was the transistor in 1947. Prior to that invention, the closest we could have gotten to a computer would have been some sort of room-sized beast full of vacuum tubes or mechanical relays: think steampunk.

What made the transistor so revolutionary was that it allowed us to create tiny logic circuits. In other words, we could suddenly build circuits that could do simple computations.

After all, the magic of a transistor is that it’s a tiny switch. In other words, we can turn it ON and OFF. Oddly enough, this switch property is what allowed us to build logic circuits.

With enough transistors, we were able to build what are known as logic gates. At its most basic, a logic gate is a digital circuit (i.e. one that works in ON/OFF states) with at least one input and one output. For example, a NOT gate would take an input and invert it. If the input is ON, the output would be OFF and vice-versa.

While flipping current ON and OFF is cool, we can do better than that. In fact, transistors can be assembled into more interesting logic gates including AND, OR, NAND, NOR, and XOR. In the simple case, each of these gates accepts two inputs for comparison. For example, an AND gate works as follows:

| Input A | Input B | Output |

|---|---|---|

| ON | ON | ON |

| ON | OFF | OFF |

| OFF | ON | OFF |

| OFF | OFF | OFF |

In other words, the only way we get any current on the output is if both the inputs are ON. Naturally, this behavior is just like the English word “and”. For instance, I might say that if it’s cold outside AND raining, then I’m not going to work. In this example, both conditions have to be true for me to skip work.

As you can imagine, each of the remaining gates has a similar behavior. For example, the output of a NAND (i.e. NOT-AND) gate is always ON unless both inputs are ON. Likewise, the output of an OR gate is ON if any of the inputs are ON. And so on.
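If it helps to see the gates in code, here is a rough sketch in Python. It assumes we model ON as `True` and OFF as `False`, and the function names (`and_gate`, `truth_table`, and so on) are just ones I made up for illustration:

```python
# ON/OFF signals are modeled as Python booleans (True = ON, False = OFF).

def not_gate(a):
    return not a

def and_gate(a, b):
    return a and b

def or_gate(a, b):
    return a or b

def nand_gate(a, b):
    # NOT-AND: invert the output of an AND gate
    return not_gate(and_gate(a, b))

def nor_gate(a, b):
    # NOT-OR: invert the output of an OR gate
    return not_gate(or_gate(a, b))

def xor_gate(a, b):
    # Exclusive OR: ON only when the inputs differ
    return a != b

def truth_table(gate):
    """Return (a, b, output) rows in the same order as the AND table above."""
    return [(a, b, gate(a, b)) for a in (True, False) for b in (True, False)]

for a, b, out in truth_table(nand_gate):
    print(a, b, out)
```

Swapping `nand_gate` for any of the other two-input gates prints that gate’s table instead.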

What’s interesting about these logic gates is that we can now use them to build up even more interesting circuits. For example, we could feed the output of two AND gates into an OR gate to simulate a more complicated sequence of logic:

If it’s raining AND cold outside, OR if it’s windy AND dark outside, I will not go to work.
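As a quick sketch, that sentence maps almost word for word onto Python’s own `and` and `or` operators. The function name `skip_work` and its parameters are my own invention for this example:

```python
def skip_work(raining, cold, windy, dark):
    # Two AND gates, one per pair of conditions...
    wet_and_cold = raining and cold
    windy_and_dark = windy and dark
    # ...whose outputs feed a single OR gate.
    return wet_and_cold or windy_and_dark

print(skip_work(raining=True, cold=True, windy=False, dark=False))  # True
print(skip_work(raining=True, cold=False, windy=False, dark=True))  # False
```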

Suddenly, we can create much more complicated circuits. In fact, there’s nothing stopping us from building up a circuit to do some arithmetic for us. However, to do that, we need to rethink the way we treat ON and OFF.

From Switches to Binary

One of the cool things about these transistor circuits is that we can now represent numbers. Unfortunately, these numbers aren’t like the ones we use in our day-to-day. In everyday life, we use a system known as decimal, which includes the digits 0 to 9.

On the other hand, computers only understand two things: ON and OFF. As a result, a computer’s number system can only take two states: 0 for OFF and 1 for ON. In the world of numbers, this is known as binary where each 0 or 1 is known as a bit.

Of course, being able to count to one isn’t all that interesting. After all, the average person has at least ten fingers they can use for counting. How could a computer possibly compete?

Interestingly, all we have to do to get a computer to count higher than one is to include more bits. If one bit can represent 0 and 1, then surely two bits could represent 0, 1, 2, and 3:

| Output A | Output B | Decimal Equivalent |

|---|---|---|

| 0 | 0 | 0 |

| 0 | 1 | 1 |

| 1 | 0 | 2 |

| 1 | 1 | 3 |

Each time we add a bit, we double the number of values we can represent. For instance, one bit gives us two possible values. Meanwhile, two bits give us four possible values. If we were to add another bit, we’d have eight possible values. Naturally, this trend continues forever.
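To make the doubling concrete, here is a small sketch in Python. The helpers `count_values` and `bits_to_decimal` are hypothetical names of mine; in practice, the built-in `int("11", 2)` does the same conversion:

```python
from itertools import product

def count_values(bits):
    """Number of distinct values that a given number of bits can represent."""
    return 2 ** bits

def bits_to_decimal(bits):
    """Convert a sequence of bits (most significant first) to a decimal number."""
    value = 0
    for bit in bits:
        value = value * 2 + bit  # shift what we have left, then add the new bit
    return value

# Reproduce the two-bit table above: 00, 01, 10, 11
for bits in product((0, 1), repeat=2):
    print(bits, bits_to_decimal(bits))

print(count_values(1), count_values(2), count_values(3))  # 2 4 8
```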

Now that we can begin to interpret ON/OFF signals as numbers, we can start building arithmetic circuits like adders. For instance, we can add two inputs using an XOR (i.e. Exclusive OR) gate and an AND gate. The XOR gate gives us the sum, and the AND gate gives us the carry:

| Input A | Input B | Carry (AND) | Sum (XOR) |

|---|---|---|---|

| 0 | 0 | 0 | 0 |

| 0 | 1 | 0 | 1 |

| 1 | 0 | 0 | 1 |

| 1 | 1 | 1 | 0 |

This type of circuit is known as a half adder, and it allows us to add any two bits together and get one of three possible outputs: 0, 1, or 2.

To no one’s surprise at this point, we can take the half adder circuit a step further and begin constructing larger arithmetic circuits. For instance, if we combine two half adders (plus an OR gate to merge their carries), we can create a full adder which includes an additional input for carries:
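Here is a minimal sketch of a half adder in Python, assuming bits are the integers 0 and 1 and using the bitwise operators `&` (AND) and `^` (XOR). The name `half_adder` is my own:

```python
def half_adder(a, b):
    """Add two single bits and return (carry, sum)."""
    carry = a & b  # AND gate: both inputs are 1
    total = a ^ b  # XOR gate: exactly one input is 1
    return carry, total

for a in (0, 1):
    for b in (0, 1):
        carry, total = half_adder(a, b)
        print(a, b, carry, total)
```

Reading the carry as the twos place and the sum as the ones place gives the three possible results: 0, 1, or 2.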

| Input A | Input B | Input Carry | Output Carry | Sum |

|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 1 | 0 | 1 |

| 0 | 1 | 0 | 0 | 1 |

| 0 | 1 | 1 | 1 | 0 |

| 1 | 0 | 0 | 0 | 1 |

| 1 | 0 | 1 | 1 | 0 |

| 1 | 1 | 0 | 1 | 0 |

| 1 | 1 | 1 | 1 | 1 |

Now, a full adder is interesting because we can treat it as a single unit. In other words, we can easily string a series of full adders together to add much larger numbers. To do that, we just need to take the output carry of one adder and tie it to the input carry of the next adder.
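Chaining adders like this can be sketched in a few lines of Python. This is only an illustration under my own assumptions: bits are the integers 0 and 1, numbers are lists with the least significant bit first, and the two half-adder carries are merged with an OR. The names `full_adder` and `ripple_carry_add` are hypothetical:

```python
def half_adder(a, b):
    """Return (carry, sum) for two single bits."""
    return a & b, a ^ b

def full_adder(a, b, carry_in):
    """Return (carry_out, sum) for two bits plus an incoming carry."""
    carry1, partial = half_adder(a, b)
    carry2, total = half_adder(partial, carry_in)
    # At most one of the two half adders can produce a carry, so OR merges them.
    return carry1 | carry2, total

def ripple_carry_add(a_bits, b_bits):
    """Add two equal-length bit lists, least significant bit first."""
    carry = 0
    result = []
    for a, b in zip(a_bits, b_bits):
        # The carry out of one full adder feeds the carry in of the next.
        carry, total = full_adder(a, b, carry)
        result.append(total)
    return result, carry

# 6 (0110) + 7 (0111), written least significant bit first
print(ripple_carry_add([0, 1, 1, 0], [1, 1, 1, 0]))  # ([1, 0, 1, 1], 0), i.e. 1101 = 13
```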

With this type of innovation, we can now perform considerably more complex arithmetic and logic. But wait, it gets better!

Introducing Volatile Memory

When people imagine computers and programming, they tend to imagine a lot of math and logic, and they’re not wrong! However, there’s another incredibly important component: memory.

Being able to store information for retrieval later is the entire foundation of modern computing. Unfortunately, up until transistors came along, storing information was hard. In general, information was often hard coded in circuitry, loaded onto magnetic tape, or held in vacuum tubes—none of which were ideal.

Then, the transistor came along, which allowed some clever folks to come up with a way to store information in circuits built from them. For instance, it’s possible to assemble two NOR gates in such a way that they can store a single bit. This is known as a SET-RESET Latch (i.e. an SR Latch).

To do this we would have to take the output of one NOR gate and tie it to the input of the other NOR gate and vice-versa. Ultimately, this leaves us with two untouched inputs—one on each NOR gate—known as SET and RESET. In terms of outputs, we have Q and !Q (NOT-Q). Q is the output of RESET’s NOR gate, and !Q is the output of SET’s NOR gate.

As long as both SET and RESET are OFF, the output maintains its previous state. This is because Q and !Q are tied to the opposite inputs. In other words, if Q is ON, !Q has to be OFF. As a result, the circuit is “latched”: Q will stay ON indefinitely. To turn Q OFF, RESET must be turned ON. At that point, the circuit latches again, and Q stays OFF indefinitely, even after RESET goes back OFF:

| SET | RESET | Q | !Q |

|---|---|---|---|

| 0 | 0 | LATCH | LATCH |

| 0 | 1 | 0 | 1 |

| 1 | 0 | 1 | 0 |

| 1 | 1 | ??? | ??? |

If your head is spinning, don’t worry! I find this kind of circuit very confusing, especially if you consider that there must be some sort of race condition. For example, what if we turn both SET and RESET on at the same time? Likewise, if the circuit relies on previous state, what state will it be in when it’s first turned on?
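One way I find helpful to untangle the latch is to simulate it. The sketch below is an idealized model, not real hardware: it assumes both NOR gates update at the same instant, and it makes the caller supply the previous outputs, since the circuit itself has no defined power-on state. The names `nor` and `sr_latch` are my own:

```python
def nor(a, b):
    """NOR gate on bits: 1 only when both inputs are 0."""
    return int(not (a or b))

def sr_latch(set_, reset, q, not_q):
    """Let a NOR-based SR latch settle, starting from the previous (q, not_q)."""
    for _ in range(4):  # a few passes around the feedback loop are enough here
        new_q = nor(reset, not_q)  # Q is the output of RESET's NOR gate
        new_not_q = nor(set_, q)   # !Q is the output of SET's NOR gate
        if (new_q, new_not_q) == (q, not_q):
            break  # outputs stopped changing, so the latch has settled
        q, not_q = new_q, new_not_q
    return q, not_q

state = (0, 1)                  # assume we start with Q OFF
state = sr_latch(1, 0, *state)  # pulse SET
print(state)                    # (1, 0)
state = sr_latch(0, 0, *state)  # release both inputs: the bit is remembered
print(state)                    # (1, 0)
state = sr_latch(0, 1, *state)  # pulse RESET
print(state)                    # (0, 1)
```

In this model, turning SET and RESET on together drives both Q and !Q to 0, which breaks the promise that they are opposites. That is why the last row of the table is usually treated as off-limits.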

All confusion aside, this is a very important circuit because it allows us to store information. For example, we could build a nice 4-bit adder using our example from before. Then, we could store the result in a set of latches. For fun, we could run the result back into our 4-bit adder as some form of accumulator. Now that’s cool! But wait, there’s more!
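To give a feel for the accumulator idea, here is a very loose sketch in Python. It cheats by using ordinary integers instead of gates and latches, and the class name `Accumulator` and its 4-bit width are assumptions of mine for illustration:

```python
class Accumulator:
    """A 4-bit register whose stored value is fed back into an adder."""

    BITS = 4

    def __init__(self):
        self.value = 0  # assumed starting state of the latches

    def add(self, amount):
        """Add to the stored value, keep only 4 bits, and return the carry out."""
        mask = (1 << self.BITS) - 1       # 0b1111
        total = self.value + (amount & mask)
        self.value = total & mask         # what the latches would store
        return total >> self.BITS         # carry that didn't fit in 4 bits

acc = Accumulator()
print(acc.add(9), acc.value)  # 0 9
print(acc.add(9), acc.value)  # 1 2  (18 doesn't fit in 4 bits)
```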

Synchronizing Circuitry

As mentioned with the SR Latch, one of the challenges with building logic circuits is timing. After all, an AND gate only works because we wait for the signals that pass through it to stabilize. In other words, it takes time (albeit a very small amount of time) for an electric signal to pass from input to output.

Unfortunately, this propagation delay is non-negligible. In fact, as a circuit grows, there are certain tolerances that we have to work around. If a signal doesn’t get where it needs to go in time, we can end up with improper results.

One way to deal with this issue is to synchronize our computations. In other words, we could pick a maximum amount of time it would take all signals to get where they need to go. When that time arrives, the signals would move on to the next computation. In the real world, that might look like this:

I have a group of friends who want to go camping (no joke—they bring it up constantly). Each of us is traveling from a different location. Specifically, I live in Ohio, and my friends are spread around in places like Pennsylvania, Wisconsin, and Florida. Ultimately, we want to camp in New York.

Rather than having everyone get there when they get there, we’d prefer that we all get there at the same time. As a result, we try to figure out who has the longest trip planned. For example, my friend from Wisconsin would probably fly while my friend from Florida would probably drive.

Since we know it takes my Floridian friend around 30 hours to drive to New York, we decide to plan around that. In other words, we agree not to begin camping until 30 hours from now. It’s possible the Floridian friend doesn’t get there in time, but we’ve agreed on a time.

While a bit overdone, this is basically how synchronization works in computing. Rather than let signals run wild, we try to determine which circuits need the most time to propagate their signals. Then, we agree to wait a set time before moving on to the next computation.

This type of synchronization is repetitive. In other words, the entire system can be built around a clock which only allows new computations at a fixed interval, say every 50 milliseconds (though real clock periods are usually in the range of microseconds or nanoseconds). That way, we don’t run into any issues where calculations are corrupted by race conditions.
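Just like planning around the longest drive, picking a clock period boils down to finding the slowest path. Here is a back-of-the-envelope sketch in Python. The delay numbers are made up, the function names are hypothetical, and real designs add extra safety margins that I’m ignoring:

```python
def min_clock_period(delays_ns):
    """The clock can tick no faster than the slowest circuit allows."""
    return max(delays_ns.values())

def max_frequency_mhz(period_ns):
    """Convert a clock period in nanoseconds to a frequency in megahertz."""
    return 1000 / period_ns

# Made-up propagation delays, in nanoseconds
delays = {"and_gate": 0.5, "latch": 1.5, "adder": 4.0}

period = min_clock_period(delays)
print(period)                     # 4.0
print(max_frequency_mhz(period))  # 250.0
```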

With this type of clock technology, we were able to really up our game in terms of logic. In fact, this is the last piece we needed to begin building modern computers.

From Hardware to Software

Between the 1940s and 1960s, computing technology was developing rapidly. For example, a lot of the transistor-based technology I’ve mentioned up to this point had already existed in one form or another through vacuum tubes or relays. However, transistors allowed for a much smaller and more cost-effective design, which gave way to the microprocessor.

Prior to that point, programming looked a bit messy. Coding was done through the hard wiring of circuits, the toggling of switches, and the reading of magnetic tape. The few programming languages that did exist (FORTRAN & COBOL) weren’t actually typed onto computers but rather punched into paper cards.

According to Dr. Herong Yang, these sorts of cards had a lot of requirements, including the fact that each card could only store one statement. That said, even with this information, I’m not sure how to read this card.

Fortunately, with the invention of the microprocessor in the early 1970s, we were able to pivot to the types of computers we use today—albeit massively simplified. In fact, computers at the time often consisted of nothing more than a terminal.

Once programming as we know it today arrived on the scene, we hit the Renaissance of software development. I mean look at some of these creations:

- Operating systems (e.g. Unix, MS-DOS)

- Text editors (e.g. Vim, Emacs)

- Version control (e.g. RCS, CVS, Git)

- The internet

- Social media (e.g. MySpace, Facebook, Twitter)

- Mobile phones (e.g. iPhone, Android)

- Video games

- Special effects

- Image processing

- GPS

- And, many more!

At this point, you’re probably wondering how Python fits into the world of computing. Don’t worry! We’ll chat about that next.

The State of Python in 2020

I wouldn’t be doing you justice if I didn’t contextualize Python in all this madness. As a matter of fact, Python (1991) didn’t appear on the scene until a few years before I was born (1994). To me, this makes Python seem a bit old, especially considering how young the field is.

Of course, as I’ve found, certain things in the world of development have a tendency to stick around. For instance, remember when I mentioned COBOL, that language from the 1950s? Yeah, that’s still very much around. Likewise, languages from the 1970s like C are also very much around.

Somehow, programming languages tend to stand the test of time, but the hardware they live on almost never outlives a decade. For instance, remember the Zune? I almost don’t! And, it was developed in 2006. Meanwhile, languages like C, Python, Java, and PHP are all still dominating the world of development.

With all that said, is there any worry that a language like Python could go extinct? At the moment, I’d say absolutely not! In fact, Python is rapidly becoming the layman’s programming language. And, I don’t mean that in a bad way; the language is just really palatable to new learners. Why else do you think it continues to grow in 2020? Hell, Toptal has an entire hiring guide to help companies select the best Python developers, so I definitely expect it to be in demand for a while.

As I mentioned several times already in this series, I streamed myself coding in Python for under an hour, and it got one of my friends hooked. If a language can attract someone just by seeing a few examples, I think it’s safe to say it’s here to stay.

That said, there are definitely other reasons people are gravitating to the language. For instance, Python is really popular in data science and machine learning thanks to tools like Pandas and PyTorch, respectively. Certainly other languages have started to cater to those fields as well, but folks in those fields aren’t necessarily computer scientists: they’re mathematicians. As a result, I imagine they prefer tools that don’t require them to have a deep understanding of software.

Overall, I expect Python to continue trending up for a while. After all, the development team does a great job of improving the language over time. In my experience, they do a much better job of catering to the community than some of the other popular languages (*cough* Java *cough*). That’s reason enough for me to continue to promote it.

A Word to the Wise

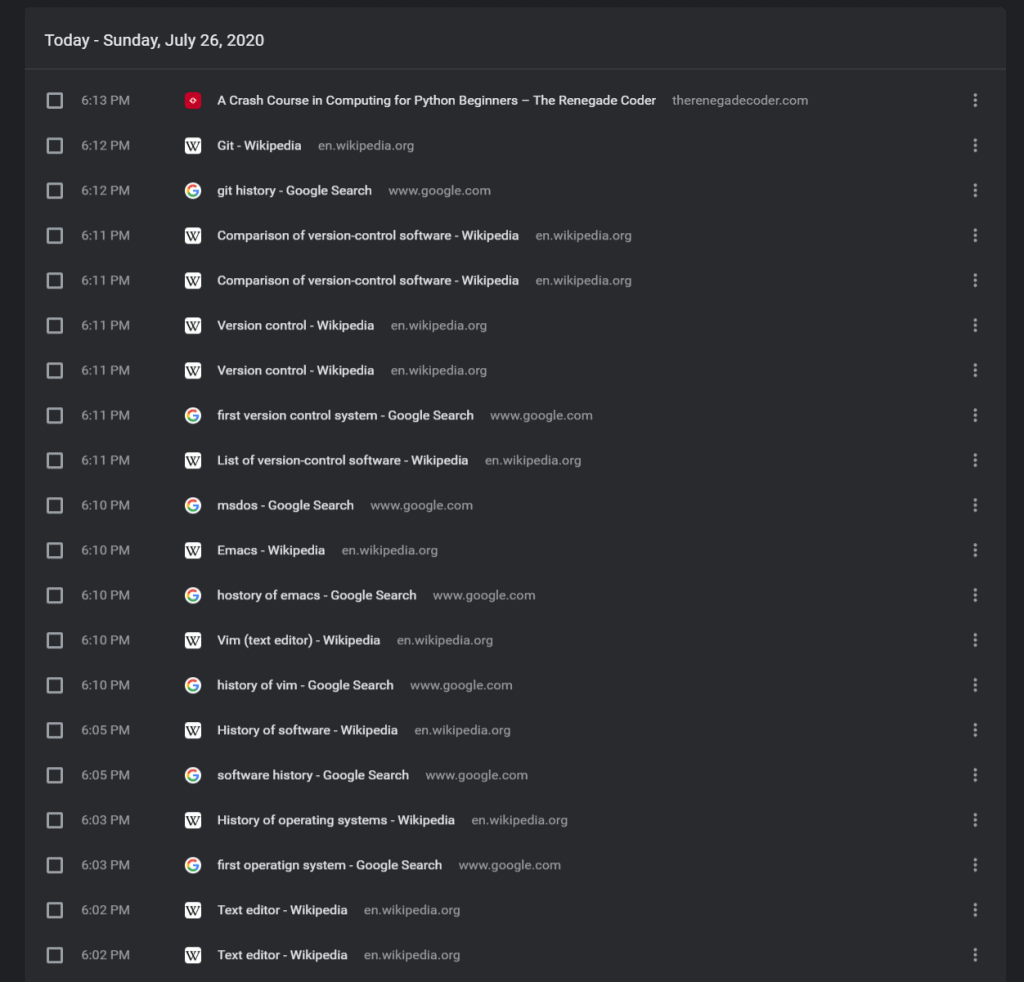

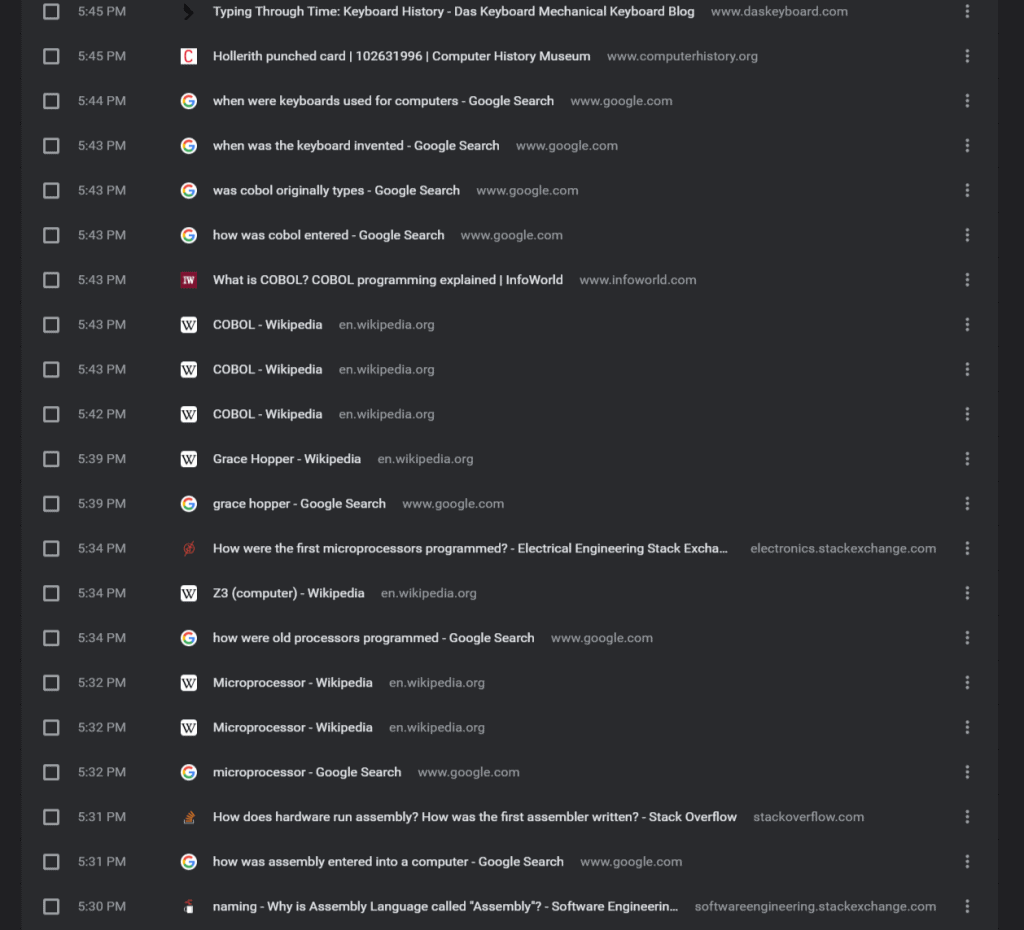

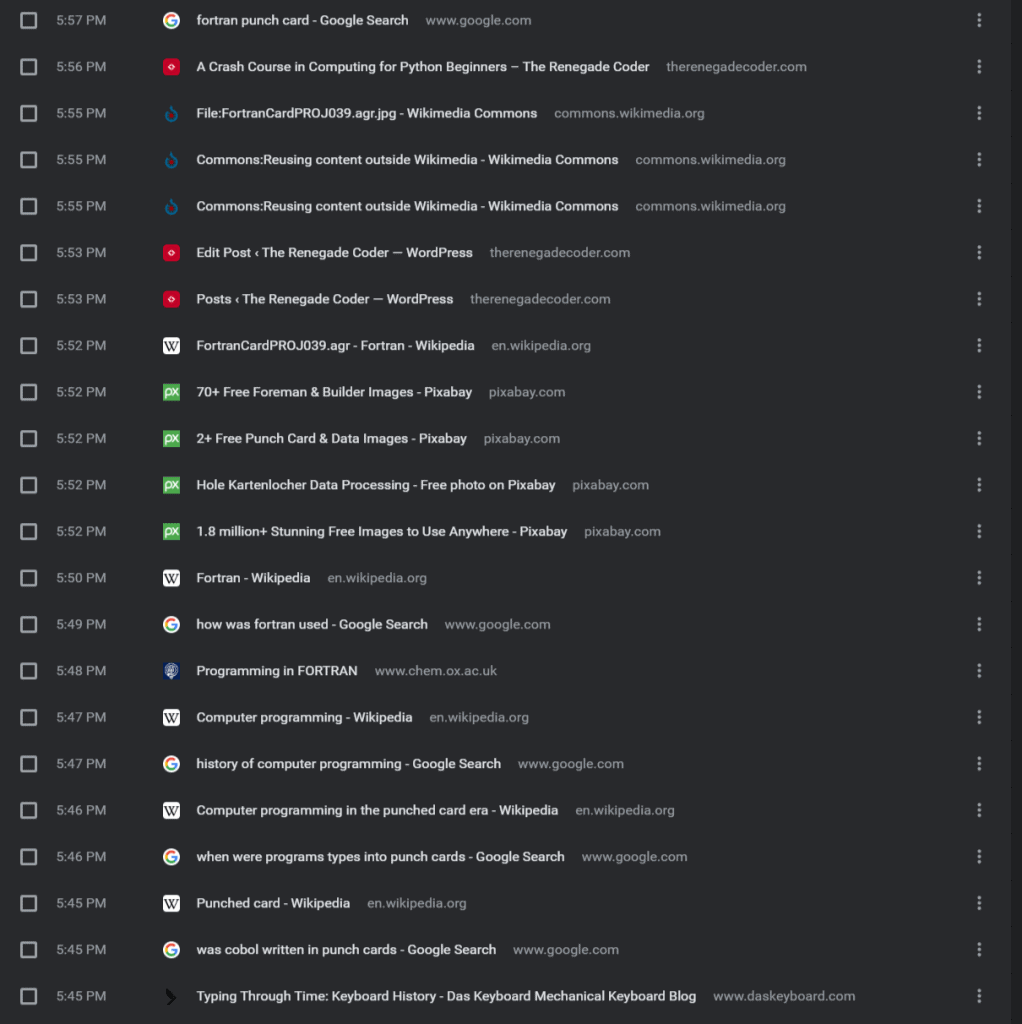

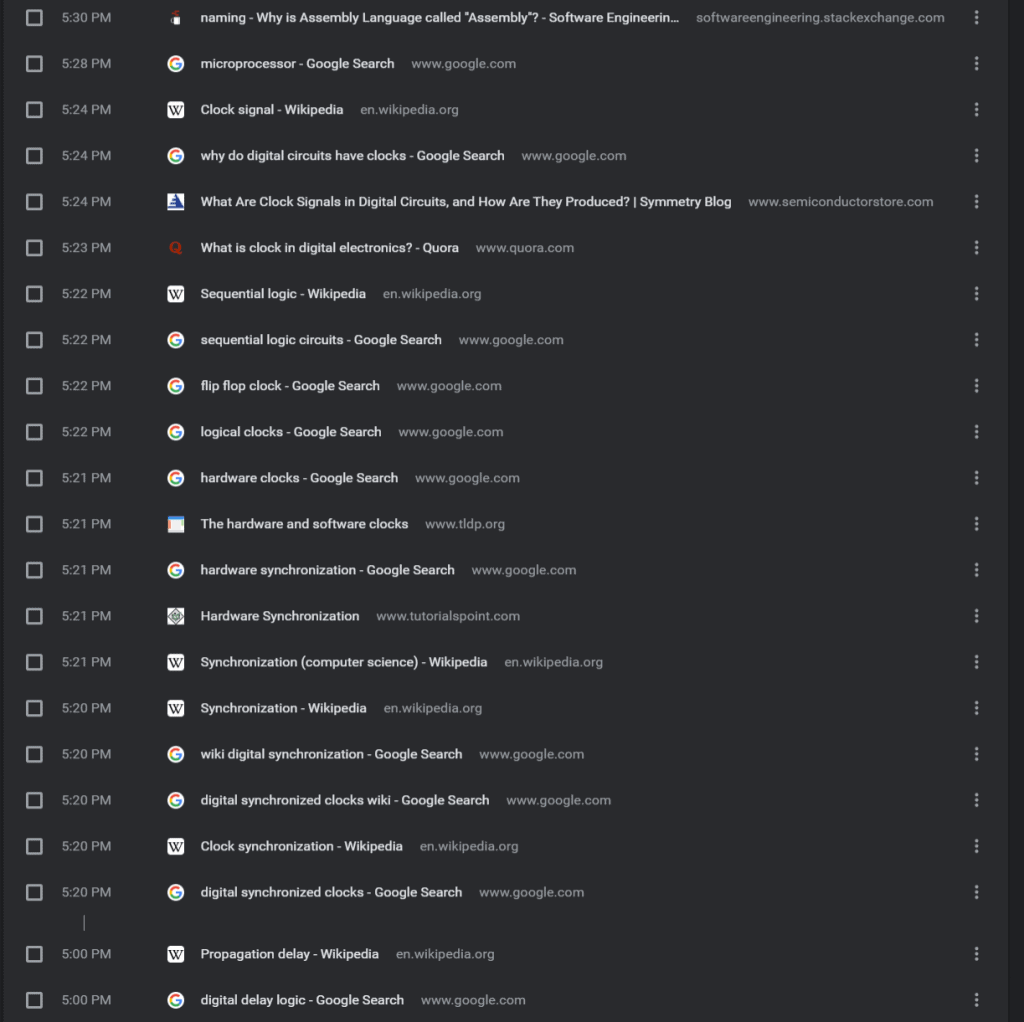

The last thing I’ll say is that I’m really no expert on the history. While I was putting this article together, I had a really tough time piecing together the order of events that led to modern computers. Honestly, I think my search history speaks for itself:

As a result, I apologize if there’s something in here that I didn’t get 100% correct. My goal at the end of the day was to provide a bit of context around computing before we dive into Python. At the very least, I hope you grew an appreciation for the field and how far we’ve come!

That said, I should say that I have a degree in Computer Engineering. So while my circuits knowledge is a bit rusty, I believe I did a decent job rehashing the basics. As we move forward, some of the concepts discussed here will still be relevant. For example, binary will crop up from time to time. Likewise, logic gates tend to lend themselves to software logic.
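As a small taste of what’s to come, here is roughly how those ideas surface in Python itself. Everything below is built into the language; only the variable names are mine:

```python
a, b = 0b1010, 0b0110  # binary literals for 10 and 6

print(bin(a & b))      # 0b10    (AND applied to each pair of bits)
print(bin(a | b))      # 0b1110  (OR applied to each pair of bits)
print(bin(a ^ b))      # 0b1100  (XOR applied to each pair of bits)
print(int("1101", 2))  # 13      (binary text to a decimal number)

raining, cold = True, False
print(raining and cold)  # False
print(raining or cold)   # True
print(not raining)       # False
```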

Up next, we’ll start talking about algorithmic thinking. Then, I think we’ll finally start talking about Python. Who knows? (Oh right, me!)

With that out of the way, all that’s left is my regular pitch. In other words, I hope you’ll take some time to support the site by visiting my article on ways to grow the site. There, you’ll find links to my Patreon, YouTube channel, and newsletter.

If none of those are your thing, here are some Python resources from the folks over at Amazon (ad):

- Effective Python: 90 Specific Ways to Write Better Python

- Python Tricks: A Buffet of Awesome Python Features

- Python Programming: An Introduction to Computer Science

Otherwise, thanks for stopping by! See you next time.
