Now that we’ve gotten some of the important theory out of the way, we can revisit our old friend, the array. When we first started talking about arrays, there was this mention about them being a pretty simple data structure. That was more of a reference to how easy arrays are to work with syntactically. In reality, there’s quite a bit going on under the hood.

In this lesson, we’ll dive into the actual physical structure of an array in memory. Then we’ll start to talk about its use cases before ultimately tying its operations back to Big O.

What is an Array?

An array is a contiguous section of memory that is broken up into blocks or elements. These elements are fixed size and can never change for the life of the array. Therefore, we can never change the type of data that we store.

As it turns out, this is especially true for the primitive types though we have a bit more flexibility with Objects. That’s because Objects are reference types, so the array actually stores a memory address for each one rather than the object itself. Java doesn’t have to do any sort of extra work to decide how large each element should be since memory addresses are a fixed size.

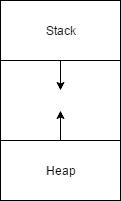

That brings us to this notion of the heap. Remember way back when we were talking about methods? In that lesson, we covered the stack. Well the heap is its counterpart. If method calls sit on the stack, then the objects our references point to fill up the heap.

The heap and the stack sit at opposite ends of memory. As each end grows, the space between them shrinks. The stack cleans itself up as method calls exit, but the heap relies on garbage collection. As references disappear from the stack, the heap can start clearing out its objects. Keep that in mind as we start playing around!

Properties of Arrays

Due to its structure, the array has some pretty interesting properties.

Random Access

For one, data access is a constant time operation or O(1). If we recall from the last lesson, elements can be accessed by a simple calculation:

memory_address_of(element_n) = memory_address_of(element_0) + size_of_element * index_of(element_n)

We call this random access because it costs the same no matter which index we choose.
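The equation above can be sketched in code. Java doesn’t expose real memory addresses, so the base address here is a made-up number, and the `RandomAccessDemo` class and `addressOf` method are hypothetical names used purely for illustration:

```java
class RandomAccessDemo {
    // Applies the random access equation: base + elementSize * index.
    static long addressOf(long baseAddress, int elementSize, int index) {
        return baseAddress + (long) elementSize * index;
    }

    public static void main(String[] args) {
        long base = 1000L;        // hypothetical address of element 0
        int size = Integer.BYTES; // an int occupies 4 bytes
        System.out.println(addressOf(base, size, 0)); // 1000
        System.out.println(addressOf(base, size, 3)); // 1012
    }
}
```

Notice that the work is one multiplication and one addition regardless of the index, which is why access is O(1).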

Linear Insert and Delete

Now things get a little complicated if we want to do any insertions or deletions. Since we can’t actually add or delete an index in the middle of an array, we have to move information around.

[4, 6, 8, 0] // Let's delete 4
[6, 8, 0, 0] // Now, let's insert 5 at index 1
[6, 5, 8, 0] // Elements had to shift around in both cases

In the case of an insertion, the best we can do is O(N). That’s because all of the elements to the right of the insertion point need to be shifted down by 1 index.

Naturally, deletions follow suit. Deleting an element requires all elements to the right of the deletion point to shift up by 1 index.

Fixed Size

Another important feature of arrays is that they’re fixed size. This becomes quite the issue if we want to append data to the array. This operation ends up being O(N) if we don’t keep track of the index of the next empty element, since we have to scan for it. Even if we do, we still end up with an O(N) operation in the worst case because the array will eventually reach maximum capacity.

At this point, we either ignore the new value or we allocate a brand new array (usually much bigger than the first one). Then, we’re forced to copy every element from the original array into the new array. The cost of that operation is O(N), and it’s typically not something we want to do very often. Instead, we usually try to allocate a worst case size for the array. That way we know we’ll never exceed its bounds.
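To make the copy step concrete, here’s a minimal sketch of the "allocate a bigger array and copy" strategy. The `GrowableIntArray` class, its starting capacity of 2, and the doubling growth factor are all assumptions chosen for illustration, not something prescribed by Java:

```java
import java.util.Arrays;

class GrowableIntArray {
    private int[] data = new int[2]; // small starting capacity for illustration
    private int size = 0;            // number of slots actually in use

    void append(int value) {
        if (size == data.length) {
            // Full: allocate an array twice as large and copy every element, O(N).
            data = Arrays.copyOf(data, data.length * 2);
        }
        data[size++] = value; // O(1) when there is room
    }

    int get(int index) {
        if (index < 0 || index >= size) {
            throw new IndexOutOfBoundsException("index: " + index);
        }
        return data[index];
    }

    int size() {
        return size;
    }

    int capacity() {
        return data.length;
    }
}
```

Appending five values to this sketch triggers two copies (at capacities 2 and 4), leaving a backing array of capacity 8.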

Search and Sort

Thanks to the power of random access, searching is pretty well optimized. If the array is sorted, we can actually request an element and find its index in O(log(N)). Imagine that we have an array as follows:

[3, 5, 6, 7, 11, 15, 18, 32, 33, 34, 79]

If we wanted to see if the array contained the value 33, we could find out by starting at one end and iterating through until we found it at index 8. However, because the array is already sorted, we can use a little trick called binary search.

With binary search, we take a stab at the middle index and determine which half to search next. This process continues until we pinpoint our requested value. The power of this algorithm comes from the fact that we kill off half the search space every iteration.

So in this case, binary search would start by grabbing index 5, the middle of the 11 elements. At index 5, we have the value 15, so we know that 33 should appear in the upper half of the array. The next index we grab is 8, which yields our result. With this algorithm, we pinpointed our request in just two iterations as opposed to nine with a basic linear scan. Keep that in mind when we move on to linked lists.
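For reference, here’s one way binary search might look in Java. This is a sketch rather than a definitive implementation; the `BinarySearchDemo` class and `binarySearch` method are names invented for this example, and returning -1 for a missing value is just one common convention:

```java
class BinarySearchDemo {
    // Returns the index of target in a sorted array, or -1 if it is absent.
    static int binarySearch(int[] sorted, int target) {
        int low = 0;
        int high = sorted.length - 1;
        while (low <= high) {
            int mid = low + (high - low) / 2; // avoids overflow of (low + high)
            if (sorted[mid] == target) {
                return mid;
            } else if (sorted[mid] < target) {
                low = mid + 1;  // discard the lower half
            } else {
                high = mid - 1; // discard the upper half
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        int[] values = {3, 5, 6, 7, 11, 15, 18, 32, 33, 34, 79};
        System.out.println(binarySearch(values, 33)); // 8
        System.out.println(binarySearch(values, 4));  // -1
    }
}
```

In practice, Java’s `Arrays.binarySearch` already covers this for sorted arrays, so rolling our own is mostly a learning exercise.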

Applications of Arrays

The power of arrays comes from their random access property while their main weakness is their fixed size. As a result, typical applications of arrays include managing user input (see the Grader example mentioned before), sorting, matrix multiplication, and implementing other data structures (e.g. stacks and queues). Of course, there are plenty of other applications, but we’ll only dig into a few below.

Sorting

Let’s say we have some data that we want to sort, and we know how much data we have. Well, we can dump that data to an array and perform a sort on it:

int[] x = {1, 6, -5, 4, 17};

Arrays.sort(x);

The code snippet above leverages Java’s Arrays class (found in the java.util package), which can be used to sort an array in place. Plenty of languages offer similar functionality, like Python (where the built-in list behaves more like an array list):

x = [1, 6, -5, 4, 17]
x.sort()

Regardless, sorting is a pretty normal application of arrays.

Implementing Other Data Structures

With arrays being first-class data structures in a lot of languages, they often serve as the building block for other data structures like stacks, queues, and array lists.

If we wanted to implement a queue using an array, we would need to track two pointers: front and back. The back pointer would change any time a user added an item to the queue while the front pointer would change any time a user removed an item from the queue.
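Here’s a rough sketch of an array-backed queue, using the usual convention that items enter at the back and leave from the front. The `ArrayQueue` class is hypothetical, and wrapping the indices around with the modulo operator (a circular buffer) is an assumption added here so that freed slots can be reused:

```java
class ArrayQueue {
    private final int[] items;
    private int front = 0; // index of the next item to remove
    private int back = 0;  // index where the next item will be stored
    private int count = 0; // number of items currently in the queue

    ArrayQueue(int capacity) {
        items = new int[capacity];
    }

    // Adds a value at the back; returns false if the array is full.
    boolean enqueue(int value) {
        if (count == items.length) {
            return false;
        }
        items[back] = value;
        back = (back + 1) % items.length; // wrap around to reuse freed slots
        count++;
        return true;
    }

    // Removes and returns the value at the front.
    int dequeue() {
        if (count == 0) {
            throw new IllegalStateException("queue is empty");
        }
        int value = items[front];
        front = (front + 1) % items.length;
        count--;
        return value;
    }

    int size() {
        return count;
    }
}
```

Both operations run in O(1) because neither one shifts any elements; only the two indices move.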

Similarly, we could implement a stack using an array by adding the push and pop functionality. Here, we would only need to maintain a single pointer to the top of the stack.
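A stack is even simpler to sketch. In the hypothetical `ArrayStack` class below, `top` is assumed to hold the index of the next free slot, which also happens to be the number of items on the stack:

```java
class ArrayStack {
    private final int[] items;
    private int top = 0; // index of the next free slot

    ArrayStack(int capacity) {
        items = new int[capacity];
    }

    // Pushes a value onto the stack; returns false if the array is full.
    boolean push(int value) {
        if (top == items.length) {
            return false;
        }
        items[top++] = value;
        return true;
    }

    // Removes and returns the most recently pushed value.
    int pop() {
        if (top == 0) {
            throw new IllegalStateException("stack is empty");
        }
        return items[--top];
    }

    boolean isEmpty() {
        return top == 0;
    }
}
```

Pushing 1, 2, and 3 and then popping three times yields 3, 2, and 1, which is the last-in, first-out behavior we expect from a stack.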

In either case, we still have to consider the limitations of the size of an array when we’re using it to build other data structures. Naturally, that’s why we tend to opt for an array list which handles situations where we might run out of space.

Java Array Syntax

It wouldn’t be a Java tutorial if we didn’t at least look at some arrays in code. The following sections describe the basic syntax surrounding a Java array.

Creation

If we recall from the last lesson in the Java Basics series, then we’ll remember that an array can be defined as follows:

int[] myIntegerArray = new int[10];

In this code, we declare an array of integers where the maximum number of integers we can store is 10. However, that’s not the only way to create an array:

int[] myIntegerArray = {5, 10, 15, 20, 26};

In this example, we create an array of size 5 with some initial values. If we choose to use the first example, Java is nice enough to default all of the values to 0.

Indexing

Now the syntax for accessing an element looks something like the following:

int value = myIntegerArray[3];

Here we are accessing index 3 of the array, which actually points to what we would probably call the 4th element. That’s because array indices start at 0.

[index 0, index 1, index 2, index 3]

While that may seem a little confusing, it follows the random access equation directly. For example, if we want the memory address for the first element, we’ll use an index of 0 in the random access equation. That index allows us to eliminate the offset from the equation and simply return the starting memory address.

Be careful when indexing an array. Any index outside the bounds of it will result in an ArrayIndexOutOfBoundsException. In other words, Java will not allow us to poke at memory outside the bounds of what we said we needed.
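To see that behavior in action, here’s a small sketch. The `BoundsDemo` class and its `inBounds` helper are hypothetical; checking the index up front like this is just one way to avoid the exception:

```java
class BoundsDemo {
    // Returns true when index is a legal position in the array.
    static boolean inBounds(int[] array, int index) {
        return index >= 0 && index < array.length;
    }

    public static void main(String[] args) {
        int[] myIntegerArray = {5, 10, 15, 20, 26};
        try {
            int value = myIntegerArray[5]; // valid indices are 0 through 4
            System.out.println(value);
        } catch (ArrayIndexOutOfBoundsException e) {
            System.out.println("Caught: " + e.getMessage());
        }
        System.out.println(inBounds(myIntegerArray, 4)); // true
        System.out.println(inBounds(myIntegerArray, 5)); // false
    }
}
```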

Traversal

To scan over all the elements in an array, we can use the following loop:

for (int i = 0; i < myIntegerArray.length; i++) {
    System.out.println(myIntegerArray[i]);
}

Here we can see that arrays have a property called length. This allows us to get the size of the array in constant time. Again, be careful. The length returns its actual size, so a length of 10 means there are 10 elements in the array. However, the index of the last element will be 9. Therefore, the following will always throw an ArrayIndexOutOfBoundsException:

int value = myIntegerArray[myIntegerArray.length];

Insertion

Inserting an element in an array is as simple as:

myIntegerArray[5] = 17;

However, what happens if index 5 has data that we want to keep? As stated before, insertion is really an O(N) algorithm because we need to shift all of the elements down. The algorithm for insertion then might look something more like the following:

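public static void insert(int[] myIntegerArray, int position, int value) {
    // Shift elements one slot over, starting from the end; the last element is overwritten
    for (int i = myIntegerArray.length - 1; i > position; i--) {
        myIntegerArray[i] = myIntegerArray[i - 1];
    }
    // Write the new value into the freed slot
    myIntegerArray[position] = value;
}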

Deletion is almost exactly the same except we shift the remaining elements up.
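As a sketch of that idea, the hypothetical `delete` method below shifts everything after the deleted position over by one slot. Filling the vacated last slot with 0 is an assumption made here to match the earlier example; a real implementation might track a separate size instead. It also assumes the array is non-empty and the position is valid:

```java
import java.util.Arrays;

class ArrayDeleteDemo {
    // Removes the element at position by shifting later elements over by one.
    static void delete(int[] array, int position) {
        for (int i = position; i < array.length - 1; i++) {
            array[i] = array[i + 1];
        }
        array[array.length - 1] = 0; // clear the slot vacated at the end
    }

    public static void main(String[] args) {
        int[] values = {4, 6, 8, 0};
        delete(values, 0);
        System.out.println(Arrays.toString(values)); // [6, 8, 0, 0]
    }
}
```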

Summary

Since this entire series is focused on data structures, we wouldn’t be doing it justice if we didn’t summarize the performance measurements for the various operations on an array.

| Algorithm | Running Time |
|---|---|
| Access | O(1) |
| Insert | O(N) |
| Delete | O(N) |
| Search (Unsorted) | O(N) |
| Search (Sorted) | O(log(N)) |

That’s it! Tune in next time to learn about linked lists. In that lesson, we’ll take a look at linked lists in almost the exact same way. Then at the end we’ll do a little compare and contrast for the two data structures we’ve learned so far.
