As someone who dabbles in data science, I’m aware that folks love their databases. However, most of the data I deal with is read-only, so I don’t really have a need for managing and updating records. As a result, CSVs are fine for me. Plus, I can share that data with others via GitHub.

With that said, I do find the structure of relational databases to be appealing. They allow you to store data in various tables that eliminate redundancy, reduce storage size, and combine easily. Therefore, it should be possible to replicate that structure with CSVs, which I recently did! This article shares that process.

Table of Contents

- CSV Data Organization

- Fixing the Redundancy

- Seeing Relational Data in Action

- Reaping the Rewards of Data Management

- But Wait, There’s More!

CSV Data Organization

Recently, I had sort of an epiphany about how we store data in tables. As someone who works with a lot of grade data, I’m used to seeing tables like this:

| Student | Homework 1 | Homework 2 | Homework 3 |

|---|---|---|---|

| Olivia Rodrigo | 4 | 7 | 4 |

| Hatsune Miku | 5 | 9 | 2 |

And, this kind of table is fine for the purposes of calculating grades. After all, the columns are useful for calculating descriptive statistics like averages and medians—assuming you know how much each assignment is worth. In addition, the rows are useful for calculating total grades per student—assuming you know the assignment groupings and weights.

Unfortunately, if you want to do anything more interesting, this kind of table is sort of annoying to work with. For instance, it’s hard to group assignments by type (e.g., homeworks, projects, etc.). Do you use the column names to guess at groupings? And when you get the correct groupings, how do you handle the different totals and/or weights per assignment?

To make matters worse, the structure of the table is very limited by its two axes: students and assignments. There is no way to add additional data to this table, like submission times, course numbers, etc. Ultimately, you end you end up having to do a lot of hardcoding and transforming of the data.

Instead, in my experience, it’s much better to structure your data by your unit of analysis, which in this case would be a single submission of an assignment. With that in mind, you can create a table that looks more like this:

| Student | Assignment | Score | Total | Group |

|---|---|---|---|---|

| Olivia Rodrigo | Homework 1 | 4 | 5 | Homeworks |

| Olivia Rodrigo | Homework 2 | 7 | 10 | Homeworks |

| Olivia Rodrigo | Homework 3 | 4 | 4 | Homeworks |

| Hatsune Miku | Homework 1 | 5 | 5 | Homeworks |

| Hatsune Miku | Homework 2 | 9 | 10 | Homeworks |

| Hatsune Miku | Homework 3 | 2 | 4 | Homeworks |

Now, while this table has significantly more redundant information in it, it’s also a lot easier to use for analysis. Want to compute the average score on homework 1? Filter out everything but homework 1 and compute the average using the score and total columns. Want to compute total scores for each student? Group the rows by student and sum the score column.

In other words, anything you could easily due with the previous table, you can easily do with this one. But, this one offers so much more in terms of extensibility. We could easily add something like submission time as another column, which offers even more in terms of analysis.

Fixing the Redundancy

For a lot of us, the data we collect is by hand, so it’s not exactly ideal to have a ton of redundant cells to enter into a table. For example, if every homework 2 is worth 10 points, it can be a pain to have to enter that repeatedly.

One solution is to convert that column to a table of its own. For example, we might make a table that looks like this:

| Assignment Name | Assignment Total | Assignment Group |

|---|---|---|

| Homework 1 | 5 | Homeworks |

| Homework 2 | 10 | Homeworks |

| Homework 3 | 4 | Homeworks |

Then, when we go to do our analysis, we reassemble the original table (more on that later). However, the way we typically do this is through ID numbers (usually called “keys” in database land). Here’s what that might look like:

| Assignment ID | Assignment Name | Assignment Total | Assignment Group |

|---|---|---|---|

| 1 | Homework 1 | 5 | Homeworks |

| 2 | Homework 2 | 10 | Homeworks |

| 3 | Homework 3 | 4 | Homeworks |

| Submission ID | Assignment ID | Student | Score |

|---|---|---|---|

| 1 | 1 | Olivia Rodrigo | 4 |

| 2 | 2 | Olivia Rodrigo | 7 |

| 3 | 3 | Olivia Rodrigo | 4 |

| 4 | 1 | Hatsune Miku | 5 |

| 5 | 2 | Hatsune Miku | 9 |

| 6 | 3 | Hatsune Miku | 2 |

Now, at first glance, this probably doesn’t seem like much of an improvement. In fact, there are more cells than we had before (i.e., 30 before and 36 now). However, the new setup scales so much better. For example, 20 submissions previously would have led to 120 cells (i.e., 20 submissions * 6 columns) while 20 submissions now would be just be 92 cells (i.e., 20 submissions * 4 columns + 3 assignments * 4 columns).

The other major benefit of the change is the old “single point of control” principle. Now, if we want to add any information to a particular assignment, we can do that at exactly one point in the entire “database.” No need to update every instance of that value when it’s only stored in a single location.

Seeing Relational Data in Action

By no means am I a database expert or a data analyst. That said, I wrote this article because I recently did a cool thing with my grade data, and I wanted to share part of the process. So, without further ado, let’s see the concepts above in action.

To start, I just want to share what some of my data looks like. For example, here’s my grading history CSV:

>>> grades_df.head() Section ID Student ID Assignment ID Grade Total 0 26319 1 1 2.00 2.0 1 26319 1 2 2.00 2.0 2 26319 1 3 2.00 2.0 3 26319 1 4 2.00 2.0 4 26319 1 5 2.00 2.0

As you can see, there are three IDs, one of which connects to the following assignment table:

>>> assignments_df.head() Assignment ID Assignment Group ID Assignment Name 0 1 1 Homework 1 1 2 1 Homework 2 2 3 1 Homework 3 3 4 1 Homework 4 4 5 1 Homework 5

And as promised, these two tables are comically easy to combine:

# Dumped the following line to a CSV # >>> grades_df.merge(assignments_df, on="Assignment ID").head() Index,Section ID,Student ID,Assignment ID,Grade,Total,Assignment Group ID,Assignment Name 0,26319,1,1,2.00,2.0,1,Homework 1 1,26319,1,2,2.00,2.0,1,Homework 2 2,26319,1,3,2.00,2.0,1,Homework 3 3,26319,1,4,2.00,2.0,1,Homework 4 4,26319,1,5,2.00,2.0,1,Homework 5

That looks quite like the original table. However, you might notice that I have assignment group IDs as well, which are stored in the following table:

>>> assignment_groups_df.head() Assignment Group ID Assignment Group Name Assignment Group Weight 0 1 Homeworks 4 1 2 Projects 15 2 3 Closed Labs 4 3 4 Quizzes 4 4 5 Midterm Exams 40

And again, here’s what the combined table looks like:

# Dumped the following line to a CSV # >>> grades_df.merge(assignments_df, on="Assignment ID").merge(assignment_groups_df, on="Assignment Group ID").head() Index,Section ID,Student ID,Assignment ID,Grade,Total,Assignment Group ID,Assignment Name,Assignment Group Name,Assignment Group Weight 0,26319,1,1,2.00,2.0,1,Homework 1,Homeworks,4 1,26319,1,2,2.00,2.0,1,Homework 2,Homeworks,4 2,26319,1,3,2.00,2.0,1,Homework 3,Homeworks,4 3,26319,1,4,2.00,2.0,1,Homework 4,Homeworks,4 4,26319,1,5,2.00,2.0,1,Homework 5,Homeworks,4

Ultimately, I ended up making a handful of tables, one for each of the following:

- Submissions

- Assignments

- Assignment Groups

- Sections

- Courses

In the end, I end up with a particularly massive table that let’s me easily perform all the analyses I want. For example, here’s what the final list of columns looks like for each submission:

Index(['Section ID', 'Student ID', 'Assignment ID', 'Grade', 'Total',

'Assignment Group ID', 'Assignment Name', 'Assignment Group Name',

'Assignment Group Weight', 'Course ID', 'Year', 'Season', 'Course Type',

'Section Days', 'Section Start Time', 'Section End Time',

'Section Building', 'Section Room Number', 'Educator Title',

'Educator Role', 'Course Department', 'Course Number', 'Course Name'],

dtype='object')

I’ll share some of the wonderful plots below!

Reaping the Rewards of Data Management

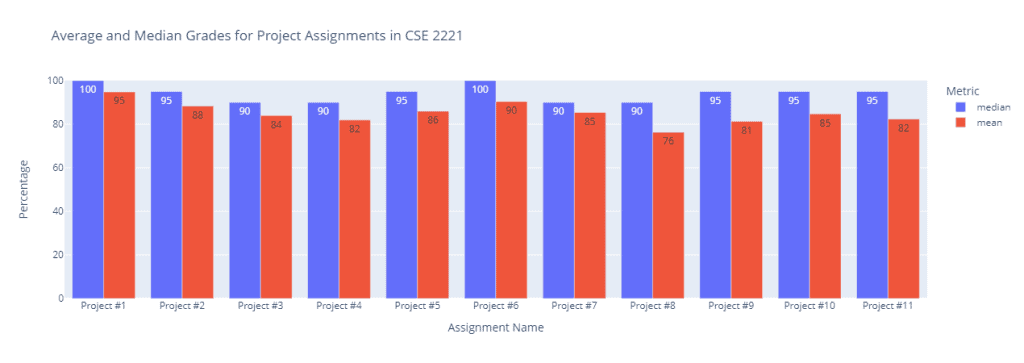

While data analysis isn’t a strength of mine, I quite like data visualization. Most of the time, I use Plotly for that to build nice little web-based dashboards. I’m in the process now of migrating all of the old data to this new format, and it has allowed me to write some comically easy code. For instance, here’s a code segment that generates a bar chart of the means and medians for a particular assignment group:

def render_assessment_calculations_figure(education_data: str, assessment_group_filter: str, course_filter: int):

"""

Plots a breakdown of the averages and medians per assessment for a specific

course and assessment group.

:param education_data: the jsonified education dataframe

:param course_filter: the course ID

:param assessment_group_filter: the assessment group # TODO: make this an ID for consistency

:return: the assessment calculations figure object

"""

# Convert the data back into a dataframe

education_df = pd.read_json(StringIO(education_data))

# Filter

education_df = education_df[education_df["Course ID"] == course_filter]

education_df = education_df[education_df["Assignment Group Name"] == assessment_group_filter]

education_df = education_df[education_df["Grade"] != "EX"]

education_df = education_df[education_df["Total"] != 0]

# Type cast

education_df["Grade"] = pd.to_numeric(education_df["Grade"])

education_df["Total"] = pd.to_numeric(education_df["Total"])

# Precompute columns

education_df["Percentage"] = education_df["Grade"] / education_df["Total"] * 100

# Perform analysis

to_plot = education_df.groupby("Assignment Name")["Percentage"].aggregate({"mean", "median", "count"})

# Helpful variables

course_code = f'{education_df.iloc[0]["Course Department"]} {str(education_df.iloc[0]["Course Number"])}'

assignment_types = education_df.sort_values("Assignment ID")["Assignment Name"].unique()

# Plot figure

assignment_calculations_fig = go.Figure(layout=dict(template='plotly'))

assignment_calculations_fig = px.bar(

to_plot,

labels={

"index": "Project Name",

"value": "Percentage",

"variable": "Metric",

"count": "Count"

},

barmode='group',

text_auto=".2s",

title=f"Average and Median Grades for {assessment_group_filter} in {course_code}",

category_orders={

"Assignment Name": assignment_types

},

hover_data=["count"]

)

assignment_calculations_fig.update_yaxes(range=[0, 100])

return assignment_calculations_fig

It probably looks like a lot of code, but it’s surprisingly tame. We take some inputs, do a bit of filtering, compute a few values, and then toss the resulting table to plotly. The result is a graph that looks something like this:

Compare that to the previous code, which is sort of clunky and hardcoded:

@callback(

Output(ID_CSE_2221_PROJECT_GRADES_FIG, "figure"),

Input(ID_CSE_2221_GRADE_DATA, "data")

)

def render_project_calculations_figure(jsonified_data):

df = pd.read_json(StringIO(jsonified_data))

return create_assignment_fig(df, "Project", 10)

@callback(

Output(ID_CSE_2221_HOMEWORK_GRADES_FIG, "figure"),

Input(ID_CSE_2221_GRADE_DATA, "data")

)

def render_homework_calculations_figure(jsonified_data):

df = pd.read_json(StringIO(jsonified_data))

return create_assignment_fig(df, "Homework", 2)

@callback(

Output(ID_CSE_2221_EXAM_GRADES_FIG, "figure"),

Input(ID_CSE_2221_GRADE_DATA, "data")

)

def render_exam_calculations_figure(jsonified_data):

df = pd.read_json(StringIO(jsonified_data))

return create_assignment_fig(df, "Exam", 100)

def create_assignment_fig(grade_data, assignment, total):

assignment_data = [name for name in grade_data.columns if assignment in name]

assignment_calculations = grade_data[assignment_data].agg(["mean", "median"]).T

assignment_calculations.rename(columns={'mean': 'Average', 'median': 'Median'}, inplace=True)

assignment_calculations_fig = go.Figure(layout=dict(template='plotly'))

assignment_calculations_fig = px.bar(

assignment_calculations,

labels={

"index": "Project Name",

"value": f"Grade/{total}",

"variable": "Metric",

"mean": "Average",

"median": "Median"

},

barmode='group',

text_auto=".2s",

title=f"Average and Median {assignment} Grades".title()

)

return assignment_calculations_fig

For starters, we could really only handle one assignment type at a time. The assignment type had to be hardcoded, which I really don’t want to have to do in the source code. The assignment type should be something I get from the data itself, not imposed during analysis. This becomes particularly problematic because it’s not scalable. I’d have to litter my code with constants every time I want to add an assignment type or course. Similarly, filtering becomes a nightmare because we have to filter the column names by hand using a list comprehension. Now, I can just filter on the assignment type column.

To make matters worse, the totals were hardcoded as well, which was an assumption that wasn’t always true. This became a bigger problem for me recently because the learning management system I use recently switched from tracking attendance as a percentage to tracking it as a total number of days. I would either have to manually compute the percentage before building my CSVs or just assume some total. Now, I can just use the total column, which is individualized to submissions.

Meanwhile, if I wanted to know the counts of each assignment—which isn’t done here—I would have to guess by multiplying the height of the dataframe by its filtered width. So it worked, but it was clunky. Now, I can literally just get the length of the filtered dataframe, which gives me an exact number.

Also, and this is just a personal gripe of mine, there just wasn’t any way to get more interesting data other than descriptive statistics. There was no way to map submission times, instructors, graders, or any other useful information to assignments. We had grades and contrived categories of assignments. That’s it!

But Wait, There’s More!

In order to get the data in the form I have it in now, I’ve had to do a bit of cleaning myself. This is fine, but it means I have to do some work up front with the data to get it in a form that’s nice for analysis.

Recently, however, I learned that Canvas, the LMS we use, has an API. So, I think pretty soon I should be able to generate these relational tables automatically. Perhaps, if I figure that out, I’ll share my process!

In the meantime, if you liked this article, I have a lot more where that came from:

- How to Plot Semesters Using Pandas and Plotly

- How to Use Python to Build a Simple Visualization Dashboard Using Plotly

- How to Plot a Line Using Matplotlib in Python: Lists, DataFrames, and More

Likewise, if you liked these articles and want to support the site a bit more, check out my list of ways to grow the site. Otherwise, take care!

Recent Posts

It's July 2024, and I have three chapters of my dissertation drafted! Two more and we'll be ready to defend.

In growing the Python concept map, I thought I'd take today to cover the concept of special methods as their called in the documentation. However, you may have heard them called magic methods or even...